=================================================================================

TensorFlow is a scalable, multiplatform programming interface for implementing and running machine learning algorithms, including convenience wrappers for deep learning. With the development of Theano and then TensorFlow, basic Python scripting skills suffice to do advanced deep-learning research. After its release in early 2015, Keras, a high-level library built on top of these frameworks, quickly became the go-to deep-learning solution for large numbers of new startups, graduate students, and researchers pivoting into the field.

TensorFlow is built around a computation graph composed of a set of nodes. Each node represents an operation that may have zero or more inputs or outputs. The values that flow through the edges of the computation graph are called tensors. In the TensorFlow library, tensors are the building blocks, as all computations are done using tensors. Google’s TensorFlow team says, "A tensor is a generalization of vectors and matrices to potentially higher dimensions. Internally, TensorFlow represents tensors as n-dimensional arrays of base datatypes."
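As a minimal sketch of the quoted definition, the following creates a scalar, a vector, a matrix, and a 3-D array as tensors of rank 0 through 3. It assumes TensorFlow 2.x with eager execution; the variable names are illustrative and do not come from the text.

```python
import tensorflow as tf

scalar = tf.constant(3.0)               # rank 0: a single number
vector = tf.constant([1.0, 2.0, 3.0])   # rank 1: a 1-D array
matrix = tf.constant([[1, 2], [3, 4]])  # rank 2: a 2-D array
cube = tf.zeros([2, 3, 4])              # rank 3: a 3-D array

# Every tensor carries a rank, a shape, and a base datatype
for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    print(name, "rank:", t.shape.rank,
          "shape:", t.shape.as_list(), "dtype:", t.dtype.name)
```

Each tensor is an n-dimensional array with a single base datatype, here float32 or int32.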

It is interesting to know that:

i) Tensors are a generalization of scalars and vectors.

ii) TensorFlow was initially built only for internal use at Google, but it was subsequently released in November 2015 under a permissive open source license.

iii) TensorFlow was developed by researchers on the Google Brain team within the Google AI organisation.

iv) TensorFlow is one of the most popular deep learning frameworks.

v) TensorFlow is an open-source software library for high-performance numerical computation.

vi) To improve the performance of training machine learning models, TensorFlow allows execution on both CPUs and GPUs. However, its greatest performance capabilities are reached when using GPUs. TensorFlow officially supports CUDA-enabled GPUs.

vii) TensorFlow currently supports frontend interfaces for a number of programming languages. Its Python API is currently the most complete, and therefore attracts many machine learning and deep learning practitioners. TensorFlow also has an official C++ API.

viii) TensorFlow relies on building a computation graph at its core, and it uses this computation graph to derive relationships between tensors from the input all the way to the output.
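To make the computation graph in item viii concrete, here is a minimal sketch that traces a small function and lists the operations (nodes) in the resulting graph. It assumes TensorFlow 2.x, where `tf.function` builds the graph; the function name `affine` and the input values are illustrative.

```python
import tensorflow as tf

@tf.function
def affine(a, x, b):
    # Two operations: a matrix product followed by an addition
    return tf.matmul(a, x) + b

a = tf.constant([[15.0, 17.0], [19.0, 1.0]])
x = tf.constant([[5.0, 0.0], [0.0, 1.0]])
b = tf.constant(18.0)

# Tracing the function with concrete inputs builds its computation graph
graph = affine.get_concrete_function(a, x, b).graph
op_types = [op.type for op in graph.get_operations()]
print(op_types)

# Calling the function runs the traced graph on the inputs
result = affine(a, x, b)
print(result.numpy())
```

The printed list contains one entry per graph node, including the inputs and the matrix product, and the tensors are the values passed between them.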

TensorFlow has many applications:

i) TensorFlow is used in data processing.

ii) TensorFlow is used for machine learning and deep learning algorithms, including natural language processing (NLP).

iii) TensorFlow has gained a lot of popularity among machine learning researchers, who use it to construct deep neural networks because of its ability to optimize mathematical expressions for computations on multidimensional arrays using graphics processing units (GPUs).

iv) Physicists use TensorFlow for complex mathematical computations.

v) Training (see page4164).

vi) scikit-learn and TensorFlow can be used to solve regression problems.

vii) Saving and deploying TensorFlow models in production.
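As one illustration of the regression use case listed above, the following sketch fits a straight line with plain gradient descent. It assumes TensorFlow 2.x; the synthetic data (y = 3x + 2), the learning rate, and the step count are made up for the example.

```python
import tensorflow as tf

# Synthetic data for y = 3x + 2 (illustrative values, not from the text)
x = tf.linspace(-1.0, 1.0, 50)
y = 3.0 * x + 2.0

w = tf.Variable(0.0)  # slope to learn
b = tf.Variable(0.0)  # intercept to learn
learning_rate = 0.1

for step in range(200):
    # Record the forward pass so gradients can be computed
    with tf.GradientTape() as tape:
        loss = tf.reduce_mean(tf.square(w * x + b - y))
    grad_w, grad_b = tape.gradient(loss, [w, b])
    # One gradient-descent update per parameter
    w.assign_sub(learning_rate * grad_w)
    b.assign_sub(learning_rate * grad_b)

print("w =", float(w.numpy()), "b =", float(b.numpy()))
```

The learned slope and intercept approach 3 and 2, the values used to generate the data.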

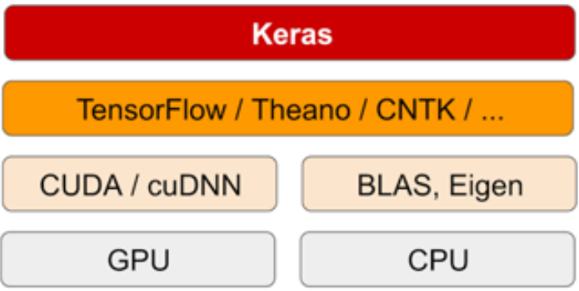

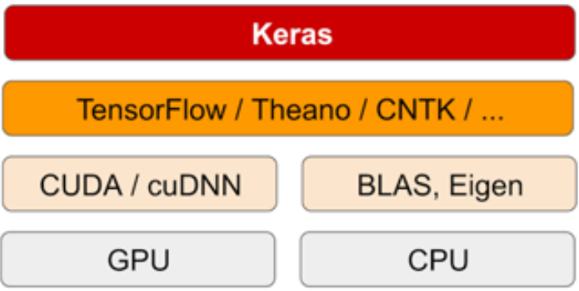

Keras handles the problem in a modular way, as shown in Figure 4243a.

Figure 4243a. The deep-learning software and hardware stack in Keras. [1]

The following operating systems support TensorFlow:

i) macOS 10.12.6 (Sierra) or later.

ii) Ubuntu 16.04 or later.

iii) Windows 7 or above.

iv) Raspbian 9.0 or later.

You do not need to explicitly request a GPU in order to use TensorFlow: if a GPU is available, TensorFlow automatically tries to use it. If you have more than one GPU, however, you must assign operations to each GPU explicitly, or only the first one will be used. To do this, open a device scope with the following line and place the operations inside it:

with tensorflow.device("/gpu:1"):

The device strings are:

"/cpu:0": the main CPU of your machine

"/gpu:0": the first GPU of your machine, if one exists

"/gpu:1": the second GPU of your machine, if a second exists

"/gpu:2": the third GPU of your machine, if a third exists, and so on.

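The device scope described above can be sketched as follows. This is a minimal example assuming TensorFlow 2.x; the fallback to the CPU when fewer than two GPUs are present is an addition so that the snippet runs on any machine.

```python
import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")
# Use the second GPU when one exists; otherwise fall back to the CPU
device_name = "/GPU:1" if len(gpus) > 1 else "/CPU:0"

with tf.device(device_name):
    # Operations created inside the scope are placed on device_name
    a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
    identity = tf.eye(2)
    product = tf.matmul(a, identity)

print("ran on:", product.device)
print(product.numpy())
```

Operations created outside any device scope are still placed automatically.
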
Ways to use TensorFlow are:

i) The most convenient way to use TensorFlow is Colab (see page4136). Colab is provided by Google’s TensorFlow team.

ii) Use it through the Databricks platform (see page4135).

Google backs the Keras project, and Keras has been adopted as TensorFlow’s high-level API. A smooth integration between Keras and TensorFlow greatly benefits both TensorFlow users and Keras users and makes deep learning accessible to most. Because TensorFlow is open source, more and more people in the artificial intelligence (AI) and machine learning communities are able to adopt it and build features and products on top of it. This availability has not only helped users implement standard machine learning and deep learning algorithms but has also allowed them to implement customized and differentiated versions of algorithms for business applications and various research projects. TensorFlow's popularity is principally due to the fact that Google itself uses it in most of its products, such as Google Maps and Gmail.

TensorFlow's API is highly flexible and is used for tasks ranging from developing complex neural networks to performing standard machine learning. Key features of the TensorFlow API include:

- High-level APIs such as Keras for building and training deep learning models using straightforward, modular building blocks.

- Low-level APIs for fine control over model architecture and training processes, which are useful for researchers and developers needing to create custom operations.

- Data API for building efficient data input pipelines, which can preprocess large amounts of data and augment datasets dynamically.

- Distribution Strategy API for training models on different hardware configurations, including multi-GPU and distributed settings, facilitating scalability and speed.

- TensorBoard for visualization, which helps in monitoring the training process by logging metrics like loss and accuracy, as well as visualizing the model graph.
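Two of the features listed above, the Data API and the high-level Keras API, can be combined in a few lines. The sketch below assumes TensorFlow 2.x; the synthetic data, the layer sizes, and the hyperparameters are illustrative choices rather than recommendations.

```python
import tensorflow as tf

tf.random.set_seed(0)

# Synthetic regression data (illustrative): y = 3x + 2
x = tf.reshape(tf.linspace(-1.0, 1.0, 64), [-1, 1])
y = 3.0 * x + 2.0

# Data API: shuffle and batch the examples into an input pipeline
dataset = tf.data.Dataset.from_tensor_slices((x, y)).shuffle(64).batch(16)

# High-level API: a one-layer Keras model built from modular blocks
model = tf.keras.Sequential([
    tf.keras.Input(shape=(1,)),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1), loss="mse")

# fit() consumes the dataset batch by batch and records the loss per epoch
history = model.fit(dataset, epochs=5, verbose=0)
losses = history.history["loss"]
print("loss per epoch:", losses)
```

The same `dataset` object could feed a custom training loop written with the low-level API instead of `fit()`.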

Table 4243. Comparison between TensorFlow 1.0 and TensorFlow 2.0.

| | TensorFlow 1.0 | TensorFlow 2.0 | TensorFlow Enterprise 2.10 |
| --- | --- | --- | --- |
| Version | Free | Free | Commercial |
| TensorFlow-io | | | Included |
| TensorFlow-estimator | | | Included |
| TensorFlow-probability | | | Included |
| TensorFlow-datasets | | | Included |
| TensorFlow-hub | | | Included |
| fairness-indicators | | | Included |
| User-friendliness | Less user-friendly | More user-friendly | |
| Usability-related changes: APIs | Not user-friendly; graph-control based, so users were not able to debug | Simpler, with two API levels: high-level (easier debugging) and lower-level (much more flexibility and configuration capability); makes it very easy to import any new data source | |
| Usability-related changes: documentation | | Improved | |
| Usability-related changes: data sources | | More built in: text (imdb_reviews, squad); image (mnist, imagenet2012, coco2014, cifar10); video (moving_mnist, starcraft_video, bair_robot_pushing_small); audio (Nsynth); structured (titanic, iris) | |
| Performance-related modifications; deployment-related modifications | | Improved its speed two times, on average | |
| Pain points | | Many pain points in 1.0 are addressed with the eager mode and AutoGraph features | |
| Eager execution | No | Yes | |
| | | Doesn’t require the graph definition | |
| | | Doesn’t make it mandatory to initialize variables | |
| | | Doesn’t require variable sharing via scopes | |
| | Needs tf.Session | A tf.Session is not needed to run code | |
| | The lifetime of state objects is managed by the tf.Session object | The lifetime of state objects is determined by the lifetime of their corresponding Python object | |

import tensorflow as tf

tfs=tf.InteractiveSession()

c1=tf.constant(10,name='x')

Tensor("x:0", shape=(), dtype=int32)

tfs.run(c1)

c2=tf.constant(5.0,name='y')

c3=tf.constant(7.0,tf.float32,name='z')

MyOp1=tf.add(c2,c3)

MyOp2=tf.multiply(c2,c3)

tfs.run(MyOp1)

tfs.run(MyOp2)

g = tf.Graph()

with g.as_default():

a = tf.constant([[15,17],[19.,1.]])

x = tf.constant([[5.,0.],[0.,1.]])

b = tf.Variable(18.)

y = tf.matmul(a, x) + b

init_op = f.global_variables_initializer()

with tf.Session() as sess:

sess.run(init_op)

print(sess.run(y)) |

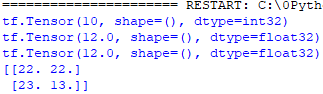

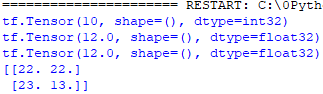

import tensorflow as tf

myConstant = tf.constant(10)

print(myConstant)

Output: tf.Tensor(10, shape=(), dtype=int32)

a = tf.constant([[15,17],[19.,1.]])

x = tf.constant([[5.,0.],[0.,1.]])

b = tf.Variable(18.)

y = tf.matmul(a, x) + b

print(y.numpy())

Code

Output:

|

|

Table 4243 (continued).

| | TensorFlow 1.0 | TensorFlow 2.0 | TensorFlow Enterprise 2.10 |
| --- | --- | --- | --- |
| tf.function | No such decorator | Decorator (@tf.function) (see page4138) | |
| tf.keras | | Under the hood, Keras is integrated with the new features of TensorFlow 2.0 | |
| | Runs a session under the hood | Runs in eager mode, so it becomes easy to debug the code | |
| Redundancy | Too many redundant components (creating confusion); a lot of duplicative classes | Has removed all the redundant elements and now comes with just one set of optimizers, metrics, losses, and layers | |
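The `@tf.function` decorator and AutoGraph mentioned in the table can be illustrated with a short sketch. It assumes TensorFlow 2.x; the function `sum_positive` and its input are hypothetical examples.

```python
import tensorflow as tf

@tf.function
def sum_positive(values):
    total = tf.constant(0.0)
    # AutoGraph rewrites this Python loop and branch into graph operations
    for v in values:
        if v > 0:
            total += v
    return total

result = sum_positive(tf.constant([1.0, -2.0, 3.0]))
print(result.numpy())  # 4.0
```

Removing the decorator leaves ordinary eager code that returns the same value, which is one reason debugging is easier in TensorFlow 2.0.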

Typically, a custom object detector built with TensorFlow needs a sufficiently large image dataset.

In general, a single iteration of the model function is not aware of whether training is going to end after it runs.

The t-SNE algorithm can also be used in TensorFlow.js to reduce the dimensions of an input dataset.

The k-means algorithm can be used in TensorFlow.js to visualize prediction results.

Compared with TensorFlow 1.0, TensorFlow 2.0 has removed some of the previous hurdles so that developers can use TensorFlow even more seamlessly.

When you package up a TensorFlow model as a Python package, every folder in the package should contain an __init__.py file.
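The role of __init__.py can be checked with the standard library alone. The sketch below builds a hypothetical layout in a temporary directory (the folder names `trainer` and `scripts` are invented for the example) and asks `pkgutil` which folders count as packages. Packaging tools such as setuptools' `find_packages` apply the same rule.

```python
import pathlib
import pkgutil
import tempfile

# Hypothetical layout: "trainer" has an __init__.py, "scripts" does not
root = pathlib.Path(tempfile.mkdtemp())
(root / "trainer").mkdir()
(root / "trainer" / "__init__.py").touch()
(root / "trainer" / "model.py").touch()
(root / "scripts").mkdir()
(root / "scripts" / "run.py").touch()

# pkgutil only reports folders that contain an __init__.py as packages
packages = [m.name for m in pkgutil.iter_modules([str(root)]) if m.ispkg]
print(packages)
```

Only `trainer` is reported, so code placed in a folder without __init__.py would be left out of the package.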

============================================

[1] François Chollet, Deep Learning with Python, 2018.
