Comparison of Regression Classes - Python for Integrated Circuits - An Online Book


Python for Integrated Circuits http://www.globalsino.com/ICs/


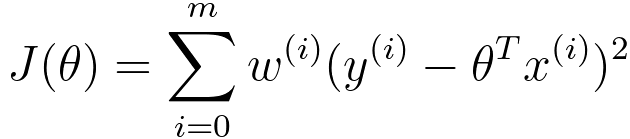

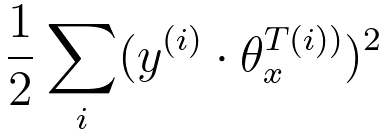

=================================================================================
Regression and regression equation in data analysis.
============================================

Regression analysis is a versatile statistical technique. Its use extends well beyond the two most familiar cases: a normally distributed (continuous) response and a binomially distributed (binary) response. Statistics and machine learning offer many different types of regression techniques, each with its own characteristics and assumptions.

Table 4154. Classes of regression.
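To make the contrast between a continuous response and a binary response concrete, the following is a minimal sketch in plain Python rather than the book's own implementation. The function names `fit_linear` and `fit_logistic`, the toy data, and the learning-rate and step settings are all assumptions chosen for illustration. The first function fits a straight line by ordinary least squares. The second fits a logistic model by simple gradient ascent on the log-likelihood.

```python
import math

def fit_linear(xs, ys):
    """Ordinary least squares for y = b0 + b1 * x (continuous response)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    return b0, b1

def sigmoid(z):
    """Logistic function mapping a real number to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.1, steps=5000):
    """Logistic regression P(y=1 | x) = sigmoid(b0 + b1 * x) (binary response).

    Fitted by gradient ascent on the mean log-likelihood; lr and steps
    are illustrative defaults, not tuned values.
    """
    b0 = b1 = 0.0
    n = len(xs)
    for _ in range(steps):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = y - sigmoid(b0 + b1 * x)  # residual on the probability scale
            g0 += err
            g1 += err * x
        b0 += lr * g0 / n
        b1 += lr * g1 / n
    return b0, b1

# Hypothetical toy data: one predictor, two kinds of response.
xs = [0, 1, 2, 3, 4, 5]
ys_continuous = [1.0, 3.1, 4.9, 7.2, 9.0, 11.1]  # roughly 1 + 2x
ys_binary = [0, 0, 1, 0, 1, 1]                   # not perfectly separable

lin_b0, lin_b1 = fit_linear(xs, ys_continuous)
log_b0, log_b1 = fit_logistic(xs, ys_binary)

print("linear:   intercept=%.3f slope=%.3f" % (lin_b0, lin_b1))
print("logistic: intercept=%.3f slope=%.3f" % (log_b0, log_b1))
print("P(y=1 | x=5) = %.3f" % sigmoid(log_b0 + log_b1 * 5))
```

Both models share the same linear predictor `b0 + b1 * x`. They differ in the assumed distribution of the response and in how the predictor maps to the expected response: directly for the linear fit, through the logistic function for the binary fit. In practice a library such as scikit-learn or statsmodels would normally replace these hand-written fits.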

============================================

[1] Domonkos Varga, No-Reference Video Quality Assessment Based on Benford’s Law and Perceptual Features, Electronics 2021, 10, 2768.
