=================================================================================

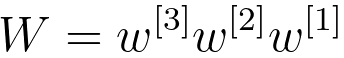

Activation functions play a crucial role in introducing non-linearity to the network, allowing it to learn complex patterns and relationships in the data. Different activation functions have different characteristics, and their choice can impact the model's ability to capture and generalize from the training data.

Here are a few commonly used activation functions and their characteristics:

- ReLU (Rectified Linear Unit):
  - Pros: Simple, computationally efficient, and helps mitigate the vanishing gradient problem because its gradient is 1 for positive inputs.
  - Cons: Can suffer from the "dying ReLU" problem, in which neurons output zero for all inputs, receive zero gradient, and stop learning.
- Sigmoid:
  - Pros: Squeezes the output into the range (0, 1), which makes it useful for binary classification problems.
  - Cons: Prone to the vanishing gradient problem (its derivative is at most 0.25) and not zero-centered.
- Tanh (Hyperbolic Tangent):
  - Pros: Similar in shape to the sigmoid but zero-centered, with outputs in (-1, 1). Its derivative (at most 1) is also larger than the sigmoid's, so it suffers somewhat less from vanishing gradients.
  - Cons: Still saturates for large |z|, so it remains vulnerable to vanishing gradients.
- Leaky ReLU:
  - Pros: Addresses the dying ReLU problem by allowing a small, non-zero gradient (the leak slope) when the input is negative.
  - Cons: Does not always outperform standard ReLU, and the leak slope is an extra hyperparameter that may need tuning.
- Softmax:
  - Used in the output layer for multi-class classification problems, as it converts raw scores into a probability distribution over the classes.
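The five functions above, plus the derivatives that drive the pros and cons listed, can be written in a few lines of NumPy. This is a minimal sketch. The function names and the default leak slope `alpha=0.01` are illustrative choices, not taken from the original text. The softmax subtracts the row maximum before exponentiating, a standard trick that avoids overflow without changing the result.

```python
import numpy as np

def relu(z):
    """ReLU: max(0, z), applied element-wise."""
    return np.maximum(0.0, z)

def relu_grad(z):
    """Derivative of ReLU: 1 for z > 0, otherwise 0 (0 at z = 0 by convention)."""
    return np.where(z > 0, 1.0, 0.0)

def sigmoid(z):
    """Logistic sigmoid: squashes inputs into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_grad(z):
    """sigma'(z) = sigma(z) * (1 - sigma(z)); its maximum is 0.25 at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)

def tanh_grad(z):
    """tanh'(z) = 1 - tanh(z)^2; its maximum is 1 at z = 0."""
    return 1.0 - np.tanh(z) ** 2

def leaky_relu(z, alpha=0.01):
    """Leaky ReLU: z for z > 0, alpha * z otherwise (alpha is a hyperparameter)."""
    return np.where(z > 0, z, alpha * z)

def softmax(scores):
    """Softmax over the last axis; subtracting the max avoids overflow in exp."""
    shifted = scores - np.max(scores, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

z = np.array([-2.0, 0.0, 3.0])
print(relu(z))           # [0. 0. 3.]
print(leaky_relu(z))     # negative input scaled by alpha instead of zeroed
print(softmax(z).sum())  # sums to 1 (up to floating-point rounding)
```

Comparing `sigmoid_grad(0.0)` (0.25) with `tanh_grad(0.0)` (1.0) shows numerically why tanh is less prone to vanishing gradients than the sigmoid.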

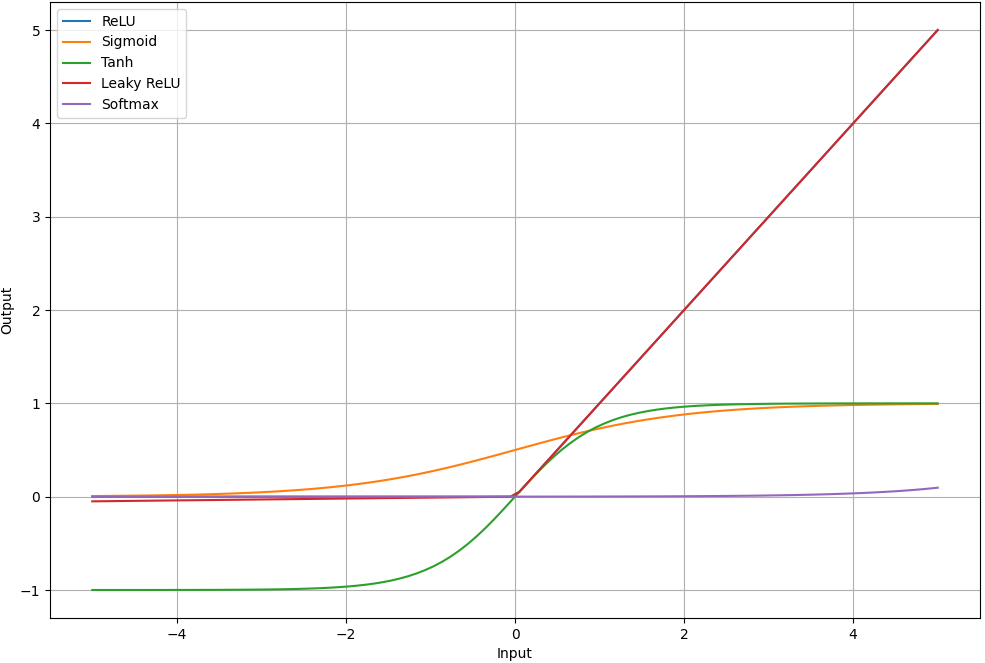

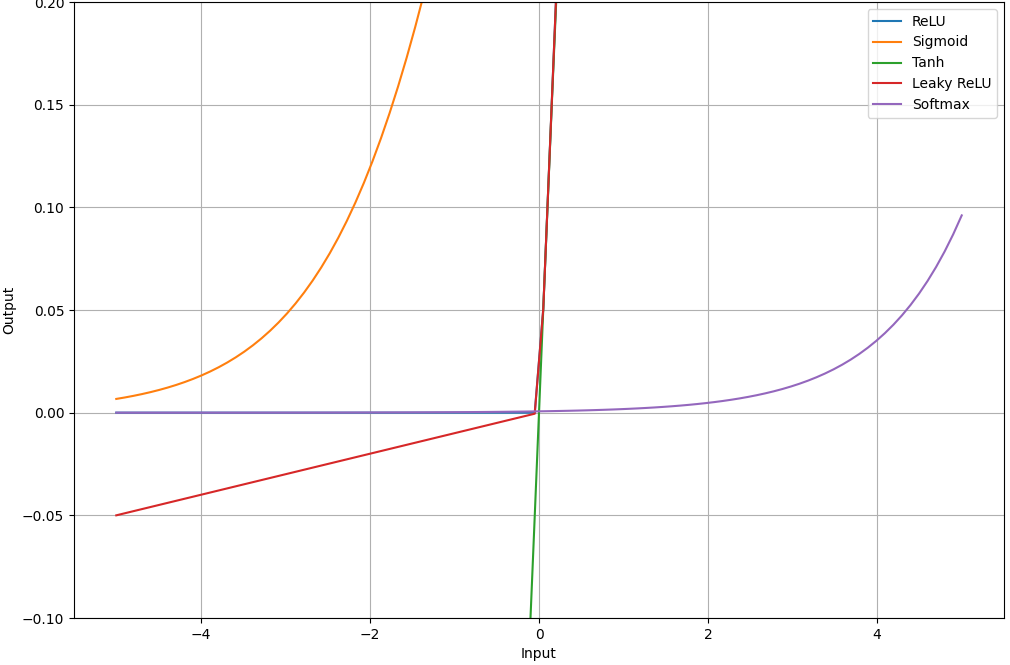

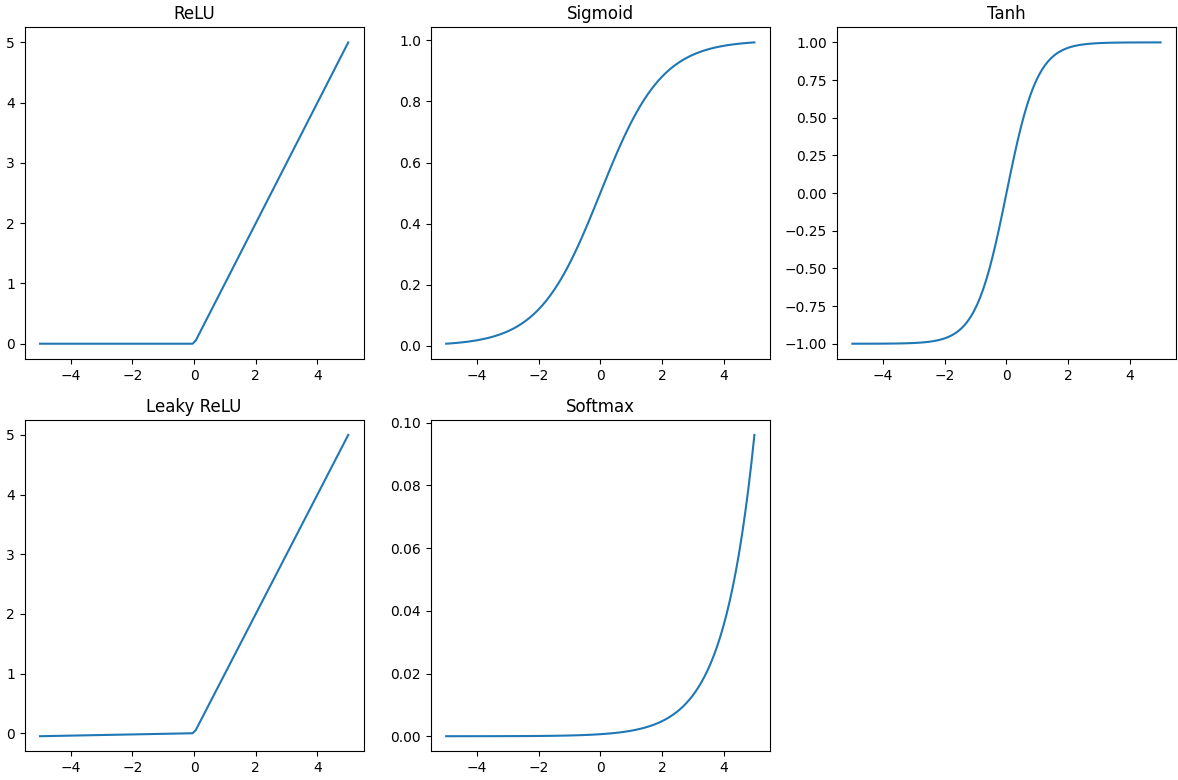

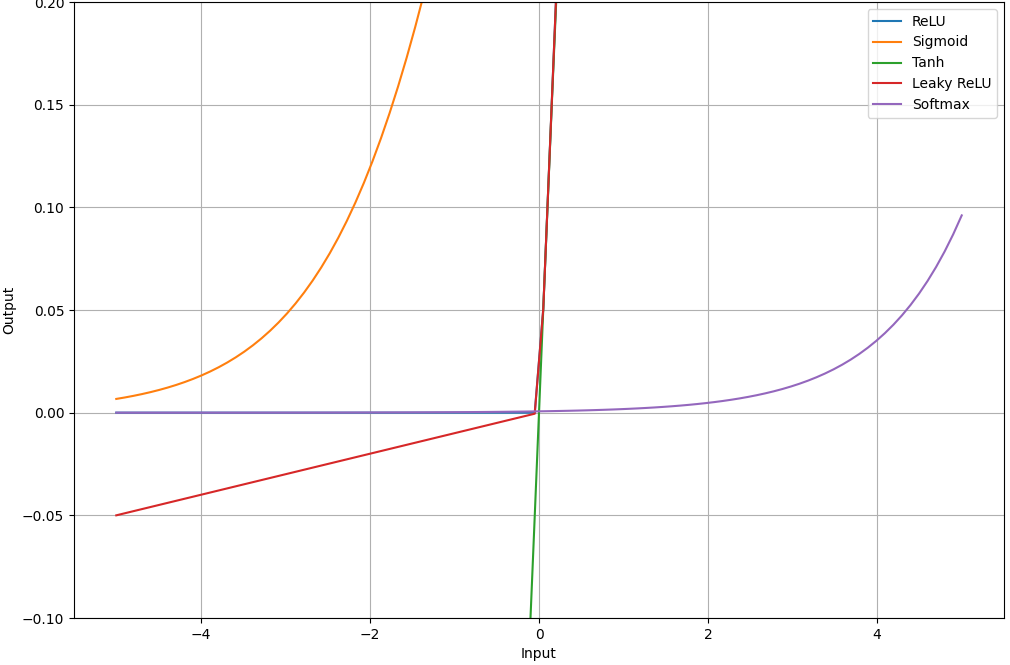

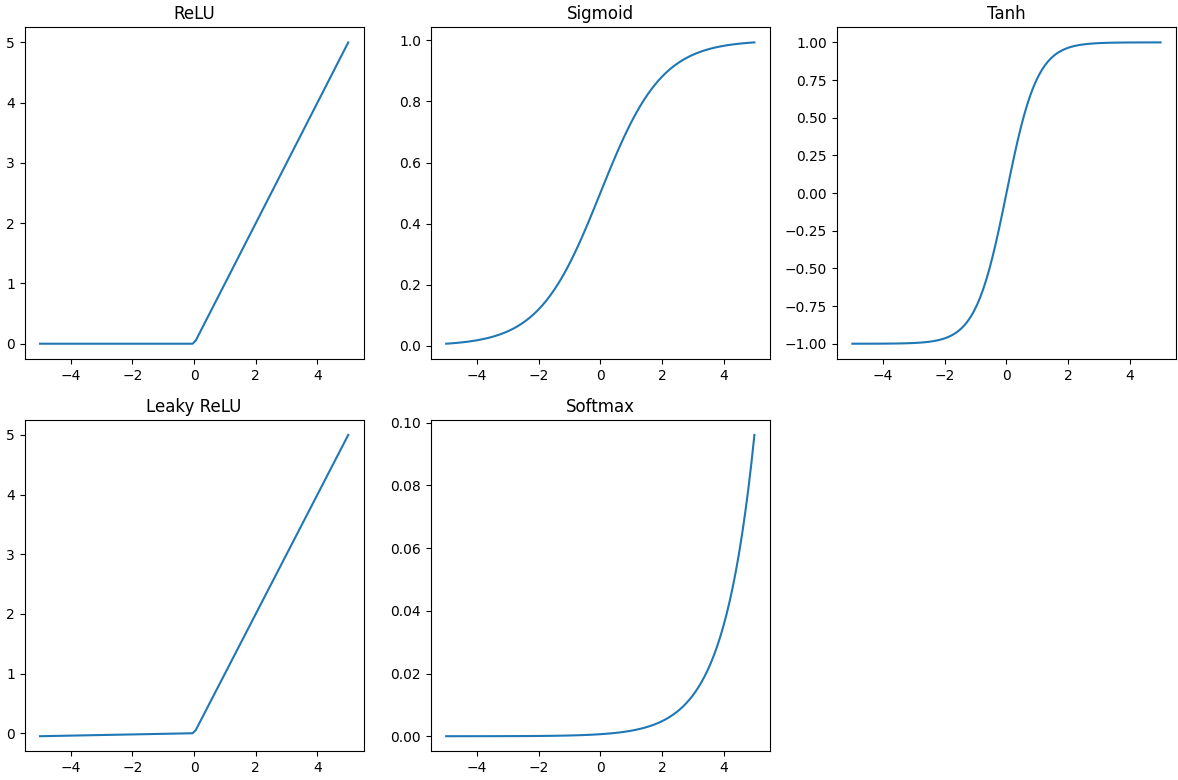

Figure 4083 shows the ReLU, sigmoid, tanh, Leaky ReLU, and softmax functions.

Figure 4083. ReLU, sigmoid, tanh, Leaky ReLU, and softmax: (a) and (b) with different y-axis scales, and (c) each function plotted separately (code).

Non-saturating non-linear activation functions such as ReLU can mitigate the progressive weakening of the training signal, relative to noise, that occurs as gradients are propagated back through each additional layer of the network during training.
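To make the shrinking-signal argument concrete, the sketch below multiplies the local activation derivatives that backpropagation chains together along one path through a stack of layers. It deliberately ignores the weights (as if every weight were 1), which is a simplifying assumption. With a sigmoid, every factor is at most 0.25, so the product decays geometrically with depth, while ReLU contributes a factor of exactly 1 for every positive pre-activation.

```python
import numpy as np

def sigmoid_grad(z):
    # Derivative of the logistic sigmoid; never larger than 0.25 (reached at z = 0).
    s = 1.0 / (1.0 + np.exp(-z))
    return s * (1.0 - s)

def relu_grad(z):
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise.
    return np.where(z > 0, 1.0, 0.0)

def chained_derivative(grad_fn, pre_activations):
    # Product of the local derivatives along one path through the layers,
    # i.e. the activation-function part of the chain rule (weights ignored).
    return float(np.prod(grad_fn(pre_activations)))

depth = 10
# Best case for the sigmoid: every pre-activation sits at z = 0, where its slope peaks.
z_sigmoid = np.zeros(depth)
# ReLU with a positive pre-activation in every layer.
z_relu = np.full(depth, 0.5)

print(chained_derivative(sigmoid_grad, z_sigmoid))  # 0.25 ** 10, about 9.5e-07
print(chained_derivative(relu_grad, z_relu))        # 1.0
```

The same helper also hints at the dying-ReLU issue: a single non-positive pre-activation anywhere on the path makes the product exactly zero.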

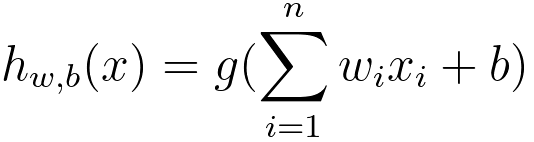

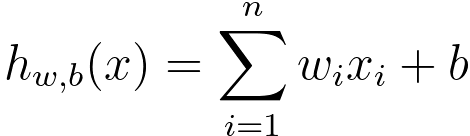

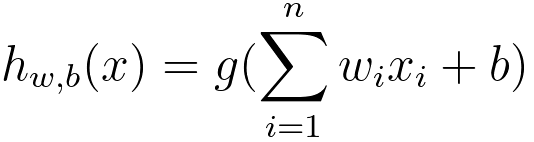

As discussed in the support vector machine (SVM) section, we have:

--------------------------------- [4083a] --------------------------------- [4083a]

where,

g is the activation function.

n is the number of input features.

Equation 4083a is the basic representation of a single-layer neural network, which is either a perceptron (with a step activation) or a logistic regression model (with a sigmoid activation), depending on the choice of the activation function g. From Equation 4083a, we can derive other forms or variations by changing the activation function, the number of layers, or the architecture of the neural network, as shown in Table 4083a.
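A single neuron with a swappable activation function illustrates how the choice of g alone turns the same weighted sum into different models. This sketch assumes Equation 4083a has the usual form ŷ = g(w₁x₁ + … + wₙxₙ + b); the names `w`, `x`, `b` and all numeric values are hypothetical, chosen only for illustration.

```python
import numpy as np

def neuron(x, w, b, g):
    """Single-layer model: apply the activation g to the weighted sum w . x + b."""
    return g(np.dot(w, x) + b)

def identity(z):
    # g(z) = z: the model reduces to linear regression.
    return z

def sigmoid(z):
    # g(z) = 1 / (1 + e^(-z)): the model becomes logistic regression.
    return 1.0 / (1.0 + np.exp(-z))

def step(z):
    # Heaviside step: the classic perceptron's hard 0/1 decision.
    return 1.0 if z >= 0 else 0.0

x = np.array([2.0, -1.0, 0.5])   # n = 3 input features (hypothetical values)
w = np.array([0.4, 0.3, -0.2])   # hypothetical weights
b = 0.1                          # hypothetical bias

print(neuron(x, w, b, identity))  # raw linear-regression prediction
print(neuron(x, w, b, sigmoid))   # probability-like output in (0, 1)
print(neuron(x, w, b, step))      # hard 0/1 decision
```

Only `g` changes between the three calls; the weights, bias, and weighted sum are identical.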

Table 4083a. Different forms or variations of Equation 4083a.

| Algorithm | Details |
|---|---|
| Linear Regression | Set g(z) = z (the identity function). Equation 4083a then reduces to a weighted sum of the input features plus a bias, which is the formula for linear regression. |
| Logistic Regression | Set g(z) = 1 / (1 + e^(-z)) (the sigmoid function). Equation 4083a then becomes the logistic regression model, a binary classification model. |
| Multi-layer Neural Network | You can add more layers to the network by introducing new sets of weights and biases and applying an activation function at each layer. This leads to a more complex model. |
| Different Activation Functions | You can choose different activation functions to give your model different characteristics. For example, you can use ReLU, tanh, or other non-linear activation functions instead of the sigmoid function. |
| Deep Learning Architectures | You can create more complex neural network architectures, such as convolutional neural networks (CNNs) for image data or recurrent neural networks (RNNs) for sequential data. |
| Regularization | You can add regularization terms, such as L1 or L2 regularization, to the loss function to prevent overfitting. |
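The "Multi-layer Neural Network" and "Regularization" rows of Table 4083a can be sketched together. The example below uses a hypothetical two-layer network with a ReLU hidden layer and a sigmoid output, evaluated with binary cross-entropy plus an optional L2 penalty λ·Σw². The layer sizes, the random initialization, and the value of λ (`lam`) are arbitrary assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 3 input features, 4 hidden units, 1 output unit.
W1, b1 = rng.normal(scale=0.5, size=(4, 3)), np.zeros(4)
W2, b2 = rng.normal(scale=0.5, size=(1, 4)), np.zeros(1)

def forward(x):
    """Two layers, each a weighted sum plus bias followed by an activation."""
    h = np.maximum(0.0, W1 @ x + b1)              # hidden layer, ReLU
    return 1.0 / (1.0 + np.exp(-(W2 @ h + b2)))   # output layer, sigmoid

def loss(x, y, lam=0.0):
    """Binary cross-entropy plus an L2 penalty on the weights (biases excluded)."""
    p = forward(x)[0]
    bce = -(y * np.log(p) + (1 - y) * np.log(1 - p))
    l2 = lam * (np.sum(W1 ** 2) + np.sum(W2 ** 2))
    return bce + l2

x, y = np.array([1.0, -0.5, 2.0]), 1.0
print(loss(x, y))            # data term only
print(loss(x, y, lam=0.01))  # larger: includes the weight penalty
```

Replacing `np.sum(W ** 2)` with `np.sum(np.abs(W))` in the penalty gives L1 regularization instead, which tends to push individual weights to exactly zero.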

Activation functions play a crucial role in neural networks for several reasons:

- Introduction of Non-Linearity: Activation functions introduce non-linearity into the network. Without non-linear activation functions, the entire neural network, regardless of its depth, would be equivalent to a single linear layer, because stacking linear operations results in a linear transformation overall. Introducing non-linearity allows neural networks to learn and represent complex, non-linear relationships in the data.

- Complex Mapping of Input to Output: Neural networks are powerful because they can learn complex mappings from inputs to outputs. Non-linear activation functions enable the neural network to model intricate patterns and relationships in the data.

- Gradient Descent Optimization: Common activation functions are differentiable (or, like ReLU, differentiable almost everywhere), so gradients can flow through them during backpropagation. These gradients are used to update the weights of the network during learning, and the ability to compute them is crucial for optimization algorithms, such as gradient descent, to adjust the weights and minimize the error.

- Normalization of Outputs: Bounded activation functions, such as sigmoid and tanh, limit the output of a neuron to a specific range. This can keep values from growing too large and causing numerical instability, although bounded functions can themselves saturate.

- Sparse Representation: Some activation functions, like rectified linear units (ReLUs), output exactly zero for negative inputs and therefore encourage sparse representations in the network. This sparsity can lead to more efficient learning and representation of features.

- Learning Complex Features: Non-linear activation functions allow neural networks to learn hierarchical and complex features. Different layers of the network can learn to represent increasingly abstract features, capturing intricate patterns in the data.
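The sparsity and output-range points above can be checked numerically. This sketch assumes zero-centered, normally distributed pre-activations (real networks can differ). Under that assumption ReLU outputs exact zeros for roughly half of the units, whereas sigmoid and tanh essentially never output exactly zero but stay inside their bounded ranges.

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical pre-activations for 10,000 units, drawn from a standard normal.
z = rng.standard_normal(10_000)

relu_out = np.maximum(0.0, z)
sigmoid_out = 1.0 / (1.0 + np.exp(-z))
tanh_out = np.tanh(z)

# Fraction of units whose output is exactly zero (inactive).
print("ReLU zeros:   ", np.mean(relu_out == 0.0))     # close to 0.5
print("sigmoid zeros:", np.mean(sigmoid_out == 0.0))  # 0.0
print("tanh zeros:   ", np.mean(tanh_out == 0.0))

# Output ranges: sigmoid stays in (0, 1), tanh in (-1, 1), ReLU is unbounded above.
print("sigmoid range:", sigmoid_out.min(), sigmoid_out.max())
print("tanh range:   ", tanh_out.min(), tanh_out.max())
print("ReLU max:     ", relu_out.max())
```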

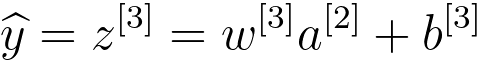

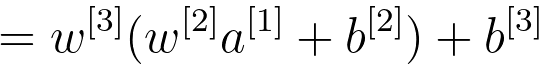

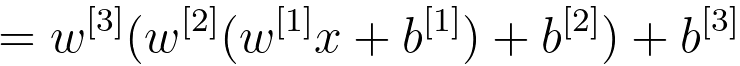

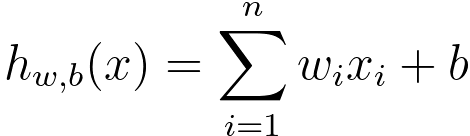

If you don't use an activation function in a neural network, the entire network would essentially behave like a single linear model, such as linear regression, regardless of its depth or number of layers. This is because a composition of linear operations is itself linear. For instance, in the case of the neural network for dog identification at page4214, we have:

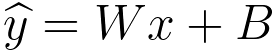

--------------------------------- [4083b] --------------------------------- [4083b]

--------------------------------- [4083c] --------------------------------- [4083c]

--------------------------------- [4083d] --------------------------------- [4083d]

--------------------------------- [4083e] --------------------------------- [4083e]

where,

--------------------------------- [4083f] --------------------------------- [4083f]

--------------------------------- [4083g] --------------------------------- [4083g]

In a neural network without activation functions, each layer would compute a linear transformation of the input, and stacking multiple such layers would still result in a linear transformation. The overall network would effectively be a linear model, and its expressive power would be limited to learning linear relationships in the data.
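The collapse can be written out for two generic layers without activation functions. The notation below is illustrative and not necessarily that of the network on page4214:

$$
\begin{aligned}
\mathbf{h} &= W_1\mathbf{x} + \mathbf{b}_1,\\
\mathbf{y} &= W_2\mathbf{h} + \mathbf{b}_2 = (W_2W_1)\,\mathbf{x} + (W_2\mathbf{b}_1 + \mathbf{b}_2) = W'\mathbf{x} + \mathbf{b}'.
\end{aligned}
$$

The NumPy sketch below checks this numerically with random, hypothetical weights. Without an activation function the two-layer output matches the single layer with W' = W₂W₁ and b' = W₂b₁ + b₂. With ReLU between the layers the equality typically breaks.

```python
import numpy as np

rng = np.random.default_rng(1)
# Hypothetical layer shapes: 3 inputs -> 5 hidden units -> 2 outputs.
W1, b1 = rng.normal(size=(5, 3)), rng.normal(size=5)
W2, b2 = rng.normal(size=(2, 5)), rng.normal(size=2)

def identity(z):
    return z

def relu(z):
    return np.maximum(0.0, z)

def two_layer(x, g=identity):
    """Two stacked layers with activation g between them (identity by default)."""
    return W2 @ g(W1 @ x + b1) + b2

# Collapse the activation-free network into a single equivalent layer.
W_prime = W2 @ W1
b_prime = W2 @ b1 + b2

x = rng.normal(size=3)
print(np.allclose(two_layer(x), W_prime @ x + b_prime))        # True: still one linear map
print(np.allclose(two_layer(x, relu), W_prime @ x + b_prime))  # typically False
```

The ReLU version differs whenever some hidden pre-activation is negative, because ReLU then changes the hidden vector in an input-dependent way that no single W' and b' can reproduce.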

The purpose of activation functions is to introduce non-linearity into the network, allowing it to learn and represent complex, non-linear relationships in the data. Activation functions enable the neural network to model and capture more intricate patterns, making it a powerful tool for tasks that involve non-linearities, such as image recognition, natural language processing, and many other real-world problems.

To a significant extent, the complexity and expressive power of a neural network are influenced by the choice of activation functions. The activation functions introduce non-linearity, enabling the network to learn and represent complex patterns and relationships in the data. The complexity of a neural network comes from its ability to model and capture intricate, non-linear features in the input data. Without activation functions, the network would be limited to linear transformations, and its capacity to represent complex relationships would be severely restricted.

Different activation functions introduce different types of non-linearities, and the choice of activation function can impact the network's performance on specific tasks. For instance, rectified linear units (ReLUs) and their variants are popular choices because they allow the network to learn sparse and non-linear representations. However, the trade-offs between different activation functions depend on the characteristics of the data and the nature of the problem being solved.

Because neural networks and deep learning involve many complex interactions and dependencies, there may be no complete, universal explanation of why certain activation functions work better than others in every case. However, researchers and practitioners have gained substantial insights into the behavior of different activation functions:

- Empirical Evidence: The choice of activation function often depends on the specific task and the characteristics of the data. Empirical evidence gathered through experimentation plays a crucial role in determining which activation functions work well for a given problem. For example, the Rectified Linear Unit (ReLU) and its variants are widely used because of their success in practice.

- Theoretical Understanding: Although a complete theory of why certain activation functions work better than others has not been established, theoretical work does shed light on their properties. For instance, the non-linearity introduced by activation functions allows neural networks to learn complex relationships between inputs and outputs.

- Vanishing and Exploding Gradients: The choice of activation function can influence the training dynamics of a neural network. Activation functions like sigmoid and tanh are prone to the vanishing gradient problem, which can impede training in deep networks. ReLU-type activation functions help mitigate this problem because their derivative is 1 for positive inputs.

- Expressiveness: The expressiveness of a neural network, i.e., its ability to represent complex functions, is influenced by the choice of activation functions. Certain functions may be better suited for capturing specific patterns in the data.

- Computational Efficiency: Some activation functions are cheaper to compute than others, which affects the speed of training and inference. For example, ReLU needs only a comparison with zero, whereas sigmoid and tanh require evaluating exponentials.

============================================
