Layers in Training Process - Python for Integrated Circuits - An Online Book

Python for Integrated Circuits http://www.globalsino.com/ICs/

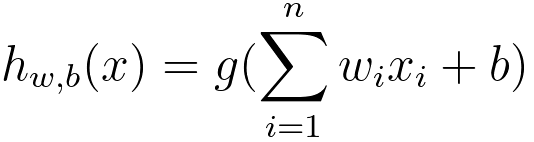

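Non-saturating, non-linear activation functions such as the rectified linear unit (ReLU) can mitigate the successive weakening of the training signal relative to noise that each additional layer introduces during training. With saturating activations such as the sigmoid or tanh, backpropagation multiplies the gradient at every layer by the activation's derivative, which is small wherever the function saturates, so in deep networks the gradient shrinks toward zero (the vanishing-gradient problem). The ReLU derivative is 1 for all positive inputs, so it does not shrink the gradient in this way.

Regularization helps build models that generalize to unseen data. For neural networks, one common form of regularization is to add dropout layers, which randomly set a fraction of the activations to zero during training.

As discussed in support-vector machines (SVM), the hypothesis can be written as Equation 4081. This equation is a basic representation of a single-layer neural network, also known as a perceptron or a logistic regression model depending on the choice of the activation function g: a threshold (step) function gives a perceptron, while the sigmoid function gives logistic regression.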
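The vanishing-gradient argument can be checked numerically. The sketch below is illustrative only: it assumes a chain of 10 layers with every weight equal to 1 and the same pre-activation value 0.5 at each layer (both hypothetical choices), then multiplies the per-layer activation derivatives the way backpropagation does. The helper names `sigmoid_grad`, `relu_grad`, and `chained_gradient` are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, a saturating activation."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    # Derivative of the sigmoid; its maximum value is 0.25 at z = 0.
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z: float) -> float:
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise (0 is used at z = 0).
    return 1.0 if z > 0 else 0.0


def chained_gradient(grad_fn, pre_activations):
    # Backpropagation multiplies one activation derivative per layer.
    # All weights are assumed to be 1 so only the activation's effect remains.
    product = 1.0
    for z in pre_activations:
        product *= grad_fn(z)
    return product


if __name__ == "__main__":
    zs = [0.5] * 10  # hypothetical pre-activation at each of 10 layers
    print("sigmoid:", chained_gradient(sigmoid_grad, zs))
    print("relu:   ", chained_gradient(relu_grad, zs))
```

Under these assumptions the sigmoid product falls below 1e-6, while the ReLU product stays at 1.0. Real networks also multiply by weight matrices, so this only isolates the activation's contribution.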
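A dropout layer can be sketched in a few lines. The function below implements inverted dropout, a widely used variant that rescales the surviving activations during training so that nothing needs to change at inference time. The function name and its list-based interface are hypothetical; in practice a framework layer such as `torch.nn.Dropout` or `tf.keras.layers.Dropout` would normally be used instead.

```python
import random


def dropout(activations, p_drop, training=True, rng=None):
    """Inverted dropout on a list of activations (hypothetical helper).

    During training each unit is zeroed with probability p_drop and each
    survivor is divided by (1 - p_drop), so every output keeps the same
    expected value as its input. At inference time the input is returned as is.
    """
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training or p_drop == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep_prob = 1.0 - p_drop
    # rng.random() is uniform on [0, 1), so "< p_drop" occurs with probability p_drop.
    return [0.0 if rng.random() < p_drop else a / keep_prob for a in activations]


if __name__ == "__main__":
    h = [1.0, 2.0, 3.0, 4.0]
    print(dropout(h, 0.5, training=True, rng=random.Random(0)))  # some zeros, survivors doubled
    print(dropout(h, 0.5, training=False))                       # unchanged at inference
```

Scaling during training (rather than at inference) keeps the inference path identical to a network without dropout, which is why this variant is common.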


$$h_{w,b}(x) = g\left(w^{T} x + b\right) \qquad [4081]$$
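Assuming Equation 4081 has the usual form h(x) = g(wᵀx + b), the single-layer network can be sketched as follows. The weights, bias, and input are hypothetical values chosen for illustration, and the step function uses the +1/-1 labels of the SVM convention (a 0/1 threshold is also common). Swapping the activation `g` between the step function and the sigmoid switches the same model between a perceptron and logistic regression.

```python
import math
from typing import Callable, Sequence


def step(z: float) -> float:
    # Perceptron / SVM-style threshold: +1 if z >= 0, otherwise -1.
    return 1.0 if z >= 0 else -1.0


def sigmoid(z: float) -> float:
    # Logistic function used by logistic regression; output lies in (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def single_layer(x: Sequence[float], w: Sequence[float], b: float,
                 g: Callable[[float], float]) -> float:
    # h(x) = g(w^T x + b): a weighted sum of the inputs followed by activation g.
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return g(z)


if __name__ == "__main__":
    w = [2.0, -1.0]  # hypothetical weights
    b = -0.5         # hypothetical bias
    x = [1.0, 1.0]   # hypothetical input
    print(single_layer(x, w, b, step))     # perceptron output
    print(single_layer(x, w, b, sigmoid))  # logistic-regression probability
```

For this input, wᵀx + b = 0.5, so the step activation returns 1.0 and the sigmoid returns about 0.62.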