Joint Probability Distribution - Python Automation and Machine Learning for ICs - An Online Book


Python Automation and Machine Learning for ICs http://www.globalsino.com/ICs/


In machine learning and probability theory, a joint probability distribution is the probability distribution over several random variables considered together. It quantifies how likely each combination of outcomes is across all of those variables. The notation "p over the space of x times y" means that p assigns a probability to every pair (x, y), where x ranges over the possible values of the first variable and y over the possible values of the second.

For example, if you have two random variables, "x" representing temperature and "y" representing humidity, the joint probability distribution "p(x, y)" describes how likely each temperature-humidity pair is to occur. It specifies the probability of observing a particular temperature value and a particular humidity value at the same time.

The joint probability distribution is fundamental in various machine learning tasks, including probabilistic modeling, Bayesian inference, and graphical models such as Bayesian networks. It provides a way to model and reason about the relationships between multiple random variables.

For discrete variables, a joint probability distribution can be written out as a probability distribution table, which lists the probability of each combination of values of the random variables. Let's use a simple example to illustrate this. Suppose we have two discrete random variables, "X" and "Y," each with three possible outcomes (x1, x2, x3 and y1, y2, y3). Here is a probability distribution table for "X" and "Y," with columns X, Y, and P(X, Y). In this table, each entry P(X=x, Y=y) is the joint probability of one combination of values, and the nine entries sum to 1.

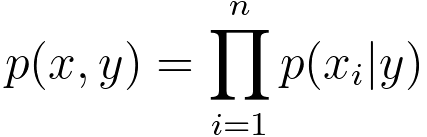
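A table of this kind can be sketched in code as a small two-dimensional array. The following is only an illustration: the probability values, the labels `x_values` and `y_values`, and the helper `joint_prob` are assumptions made for the example and are not taken from the table above.

```python
import numpy as np

# Hypothetical joint probabilities P(X = x_i, Y = y_j).
# The numbers are illustrative only; they are not the values from the table.
x_values = ["x1", "x2", "x3"]
y_values = ["y1", "y2", "y3"]
joint = np.array([
    [0.10, 0.05, 0.15],   # row x1
    [0.05, 0.20, 0.10],   # row x2
    [0.10, 0.05, 0.20],   # row x3
])

# A valid joint distribution is non-negative and sums to 1 over all cells.
assert np.all(joint >= 0)
assert np.isclose(joint.sum(), 1.0)


def joint_prob(x, y):
    """Look up P(X = x, Y = y) by row (x) and column (y)."""
    return joint[x_values.index(x), y_values.index(y)]


# Joint probability of X taking the value x2 and Y taking the value y3.
p_x2_y3 = joint_prob("x2", "y3")
print(p_x2_y3)
```

Storing the table as an array with one axis per variable keeps the lookup a plain index operation and makes the sum-to-one check a single call.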

For instance, to find the joint probability of "X" taking the value x2 and "Y" taking the value y3, we look up the entry of the table for x2 and y3, which gives P(X=x2, Y=y3). The table quantifies the joint probabilities of all possible combinations of values of "X" and "Y," so it is a complete representation of their joint probability distribution.

The joint probability distribution of n random variables x1, x2, ..., xn, conditioned on the variable y, can be given by,

$$p(x_1, x_2, \ldots, x_n \mid y) = \prod_{i=1}^{n} p(x_i \mid y)$$

The equation states that the joint probability distribution of all the x variables given y, denoted as p(x|y), can be expressed as the product of the conditional probability distributions of each x variable given y, i.e., p(xi|y), where i ranges from 1 to n. This factorization holds when the x variables are conditionally independent given y.

The product rule in probability theory gives,

$$p(x, y) = p(x \mid y)\, p(y)$$

This equation expresses how the joint probability of two events or random variables x and y can be calculated from the conditional probability of x given y, p(x|y), and the marginal probability of y, p(y).
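The product rule and the factorization under conditional independence can both be checked numerically. The sketch below assumes small hypothetical tables; `joint`, `p_x1_given_y`, and `p_x2_given_y` are made-up values chosen only so that the arithmetic is easy to follow.

```python
import numpy as np

# Hypothetical joint table p(x, y): rows index x, columns index y.
joint = np.array([
    [0.10, 0.05, 0.15],
    [0.05, 0.20, 0.10],
    [0.10, 0.05, 0.20],
])

# Marginal p(y): sum the joint over x (down each column).
p_y = joint.sum(axis=0)

# Conditional p(x | y) = p(x, y) / p(y); each column now sums to 1.
p_x_given_y = joint / p_y

# Product rule: p(x, y) = p(x | y) * p(y) recovers the joint table.
reconstructed = p_x_given_y * p_y
product_rule_holds = np.allclose(reconstructed, joint)
print(product_rule_holds)

# Conditional-independence factorization for two binary variables x1, x2:
# p(x1, x2 | y) = p(x1 | y) * p(x2 | y).
# Hypothetical conditionals; rows index the x value, columns index y.
p_x1_given_y = np.array([[0.7, 0.2],
                         [0.3, 0.8]])
p_x2_given_y = np.array([[0.6, 0.1],
                         [0.4, 0.9]])

# Broadcast to a table of shape (x1, x2, y).
p_x1x2_given_y = p_x1_given_y[:, None, :] * p_x2_given_y[None, :, :]

# For each fixed y, the factorized table is itself a valid distribution.
print(p_x1x2_given_y.sum(axis=(0, 1)))
```

The second half builds the conditional joint from the factors, so it only demonstrates what the factorization produces; it does not test whether a given dataset actually satisfies conditional independence.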
