=================================================================================

Machine learning and data science use two types of hypothesis classes, the "finite hypothesis class" and the "infinite hypothesis class":

- Finite Hypothesis Class: In this case, the hypothesis class (often denoted H) is assumed to be finite. A hypothesis class is the set of possible models or functions that a machine learning algorithm can choose from when learning the relationship between input data and output. When the hypothesis class is finite, there is a limited number of candidate models or functions to choose from. Conceptually, a finite hypothesis class H is a set of distinct hypotheses; if H contains m hypotheses, it can be written as H = {h₁, h₂, ..., hₘ}.

- Infinite Hypothesis Class: Conversely, an infinite hypothesis class contains an unbounded number of potential models or functions. This is the case, for example, when hypotheses are indexed by real-valued parameters, so the algorithm can choose among infinitely many different models to fit the data. An infinite hypothesis class cannot be written as a finite list such as {h₁, h₂, ..., hₘ}; instead, it is characterized by being unbounded and is typically described as a family of hypotheses indexed by continuous parameters.
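As a minimal sketch of the difference, the Python below assumes a hypothetical family of one-dimensional threshold classifiers, h_t(x) = 1 if x ≥ t and 0 otherwise. Restricting t to a fixed grid of five values gives a finite class H = {h₁, ..., h₅} whose members can be enumerated and checked one by one; letting t range over all real numbers gives an infinite class that can only be described through the parameter t. The toy data, the grid, and the names `make_threshold`, `empirical_error`, and `erm` are illustrative assumptions, not part of the original text.

```python
from typing import Callable, List, Sequence, Tuple

# A hypothesis maps a single real-valued input x to a label in {0, 1}.
Hypothesis = Callable[[float], int]


def make_threshold(t: float) -> Hypothesis:
    """Return the threshold classifier h_t(x) = 1 if x >= t, else 0."""
    return lambda x: 1 if x >= t else 0


def empirical_error(h: Hypothesis, data: Sequence[Tuple[float, int]]) -> float:
    """Fraction of training examples (x, y) that h misclassifies."""
    return sum(h(x) != y for x, y in data) / len(data)


def erm(H: List[Hypothesis], data: Sequence[Tuple[float, int]]) -> Tuple[int, float]:
    """Empirical risk minimization over a finite class: try every hypothesis.

    Returns the index of the hypothesis with the lowest training error
    (the first one on ties) together with that error.
    """
    errors = [empirical_error(h, data) for h in H]
    best = min(range(len(H)), key=lambda i: errors[i])
    return best, errors[best]


# Finite class: m = 5 thresholds on a fixed grid, H = {h_1, ..., h_5}.
thresholds = [0.0, 0.25, 0.5, 0.75, 1.0]
finite_H = [make_threshold(t) for t in thresholds]

# Infinite class: make_threshold(t) for every real t. It cannot be listed,
# so the exhaustive search done by erm() is not possible; the class is
# described only through the parameter t.

# Toy training data (x, y), made up for illustration.
data = [(0.1, 0), (0.3, 0), (0.6, 1), (0.9, 1)]
best_i, best_err = erm(finite_H, data)
print(thresholds[best_i], best_err)  # 0.5 0.0
```

Because the finite class can be listed, `erm` simply tries every hypothesis; for an infinite class, a learner has to search or optimize over the parameter t instead of enumerating hypotheses.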

The hypotheses in these classes, and the equations that describe them, depend on the specific machine learning algorithm or model being used. For example, in linear regression, a hypothesis can be written as:

$$h(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \cdots + \theta_n x_n \tag{3956a}$$

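where h(x) is the predicted output, θ₀, θ₁, θ₂, ..., θₙ are parameters to be learned, and x₁, x₂, ..., xₙ are input features. Because the parameters can take any real values, the hypotheses of this form make up an infinite hypothesis class.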
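The linear hypothesis in equation 3956a can be evaluated directly. The sketch below is illustrative only: the function name `linear_hypothesis` and the sample numbers are hypothetical. It takes a parameter vector θ = (θ₀, θ₁, ..., θₙ) and a feature vector x = (x₁, ..., xₙ) and returns h(x).

```python
from typing import Sequence


def linear_hypothesis(x: Sequence[float], theta: Sequence[float]) -> float:
    """Evaluate h(x) = θ₀ + θ₁x₁ + ... + θₙxₙ.

    theta holds (θ₀, θ₁, ..., θₙ); x holds the n features (x₁, ..., xₙ).
    """
    if len(theta) != len(x) + 1:
        raise ValueError("theta must have exactly one more entry than x (the intercept θ₀)")
    # Intercept θ₀ plus the weighted sum of the features.
    return theta[0] + sum(t * xi for t, xi in zip(theta[1:], x))


# Two features with hypothetical parameters: h(x) = 1.0 + 2.0*x₁ - 0.5*x₂
print(linear_hypothesis([3.0, 4.0], [1.0, 2.0, -0.5]))  # 5.0
```

Each distinct choice of `theta` defines a different hypothesis h, so learning amounts to picking one θ from a continuum of possibilities.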

The equations and representations vary with the problem and the chosen learning algorithm, but the distinction between finite and infinite hypothesis classes applies in every case.

Table 3956 compares finite and infinite hypothesis classes. In the table, the flexibility of a hypothesis class refers to its ability to represent and capture the underlying patterns or relationships in the data.

Table 3956. Finite hypothesis class versus infinite hypothesis class.

| |

Finite hypothesis class |

Infinite hypothesis class |

| Number of classes |

|H| = R |

|

| Probability |

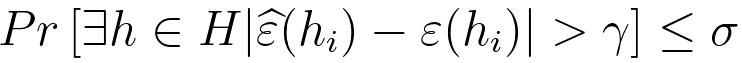

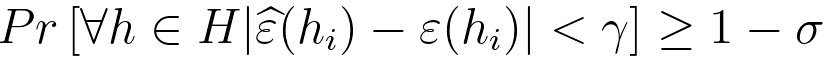

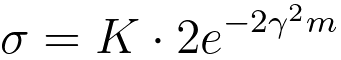

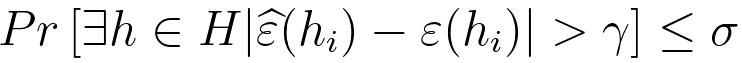

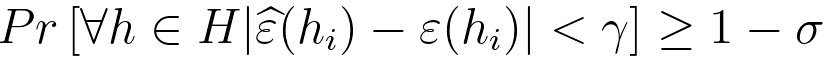

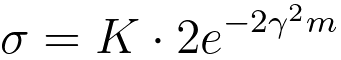

With uniform convergence and union bound, we can have,

The inequality expresses a bound on the probability that, for all hypotheses hi in the hypothesis class H, the difference between the empirical error and the true error is less than γ. The inequality basically tells us that once the sample size is fixed, then the other parameters can be evaluated to minimize the risks.

H: The hypothesis class, which represents the set of possible models or functions that the learning algorithm can choose from.

γ: A parameter that measures the acceptable deviation between the empirical error and the true error.

K: A constant that represents the confidence level.

|

|

| Options |

Limited Options:

- Finite Set: The hypothesis class consists of a finite, countable number of hypotheses.

- Limited Expressiveness: The model has a restricted capacity to represent complex relationships in the data.

|

Unlimited Options:

- Infinite Set: The hypothesis class contains an infinite number of possible hypotheses.

- Higher Expressiveness: The model can potentially capture more complex relationships in the data.

|

| Computational Advantages |

- Easier to Manage: Handling a finite set of hypotheses is computationally more feasible and often simpler.

- Less Computational Cost: Training and evaluating models with a finite hypothesis class may be less computationally intensive.

|

- Increased Complexity: Dealing with an infinite hypothesis class can introduce computational challenges in terms of training and evaluation.

- Higher Computational Cost: Training and evaluating models with an infinite hypothesis class may require more resources.

|

| Theoretical Analysis |

- More Conclusive Results: Theoretical analysis, such as deriving generalization bounds, may be more straightforward due to the finite nature of the hypothesis class.

- Well-Defined Bounds: It may be easier to establish bounds on the algorithm's performance.

|

- Complex Analysis: Theoretical analysis becomes more intricate due to the infinite nature of the hypothesis space.

- Generalization Bounds Challenges: Establishing precise generalization bounds may be more challenging in the presence of an infinite hypothesis class.

|

| Flexibility |

- Restricted Representational Power: A finite hypothesis class is limited in the types of functions or relationships it can represent. There are only a finite number of hypotheses to choose from, which may not be sufficient to capture complex patterns in the data.

- Potential Underfitting: Due to its limited expressiveness, a finite hypothesis class may struggle to fit the training data well, leading to underfitting. Underfitting occurs when the model is too simplistic to capture the true underlying structure of the data.

- Biased Representations: The finite nature of the hypothesis class may introduce bias into the model, as it may not be able to adequately represent certain patterns or relationships present in the data.

- Simplicity Trade-off: While a finite hypothesis class is computationally more manageable, there is a trade-off with model simplicity. The model may be too simplistic to handle complex real-world datasets.

|

- Adaptability: An infinite hypothesis class allows for greater flexibility in adapting to complex and nuanced patterns in the data.

- Risk of Overfitting: There's a risk of overfitting as the model may have the capacity to fit the training data too closely.

|
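A standard form of the uniform-convergence inequality described in the Probability row, obtained from Hoeffding's inequality plus the union bound, assumes N i.i.d. training examples and a 0-1 loss. With |H| = m the number of hypotheses, ε(h) the true error, and ε̂(h) the empirical error, it reads

$$P\left(\forall h \in H:\ |\varepsilon(h) - \hat{\varepsilon}(h)| \le \gamma\right) \ge 1 - 2|H|\exp\left(-2\gamma^2 N\right).$$

The exact form used in the table may differ; N and the failure probability δ are symbols introduced here. Requiring the right-hand side to be at least 1 − δ gives N ≥ ln(2|H|/δ) / (2γ²) for a target γ, or γ = √(ln(2|H|/δ) / (2N)) once N is fixed. The Python sketch below evaluates these expressions; the function names and numbers are hypothetical.

```python
import math


def failure_probability_bound(h_size: int, gamma: float, n: int) -> float:
    """Upper bound 2|H|exp(-2γ²N) on P(some h in H has |ε(h) - ε̂(h)| > γ)."""
    return 2 * h_size * math.exp(-2 * gamma ** 2 * n)


def required_sample_size(h_size: int, gamma: float, delta: float) -> int:
    """Smallest integer N with 2|H|exp(-2γ²N) <= δ, i.e. N >= ln(2|H|/δ) / (2γ²)."""
    return math.ceil(math.log(2 * h_size / delta) / (2 * gamma ** 2))


def achievable_gamma(h_size: int, n: int, delta: float) -> float:
    """γ at which the bound equals δ for a fixed N: sqrt(ln(2|H|/δ) / (2N))."""
    return math.sqrt(math.log(2 * h_size / delta) / (2 * n))


# Hypothetical numbers: 1,000 hypotheses, 95% confidence (δ = 0.05).
n = required_sample_size(1000, gamma=0.05, delta=0.05)
print(n)                                         # 2120
print(failure_probability_bound(1000, 0.05, n))  # just under 0.05
print(achievable_gamma(1000, n, 0.05))           # just under 0.05
```

The sample size grows only logarithmically in |H|, which is one reason bounds for finite classes are comparatively easy to state; for an infinite class, |H| is unbounded and this expression gives no useful guarantee.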

============================================
