Epoch in Machine Learning

Python for Integrated Circuits: An Online Book, http://www.globalsino.com/ICs/


In machine learning, an "epoch" is one complete pass through the entire training dataset during the training of a neural network or another machine learning model. During each epoch, the model processes every example in the training dataset once and updates its internal parameters (weights and biases) based on the errors it makes when predicting the training data. With mini-batch training, these updates happen once per batch, so a single epoch usually contains many parameter updates. The training process typically runs over multiple epochs as follows:

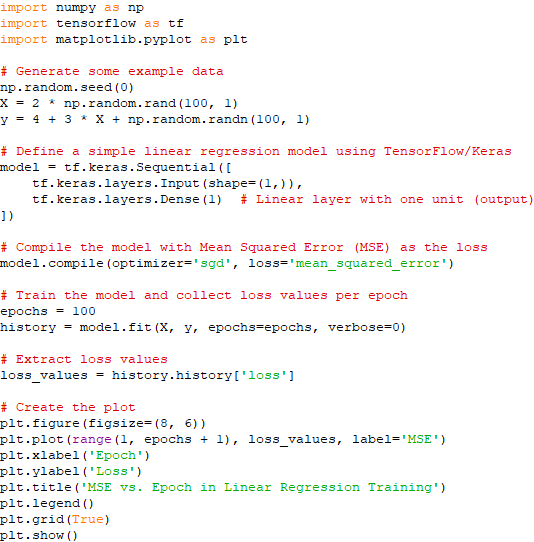

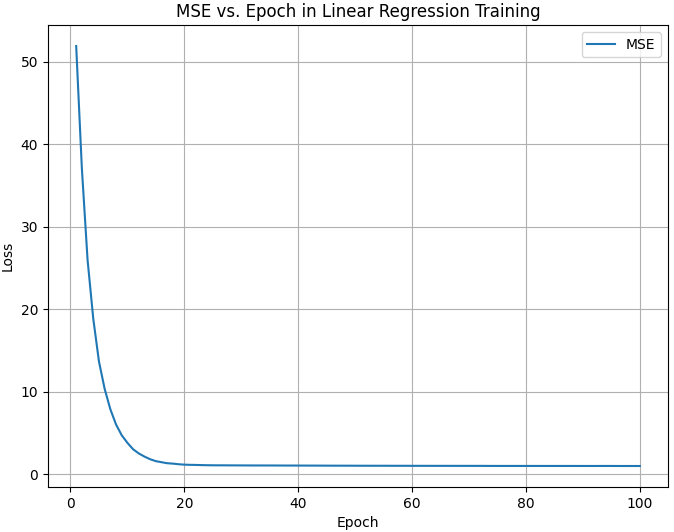
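The epoch loop described above can be sketched in a few lines. This is a minimal illustration, assuming a one-feature linear model y ≈ w·x + b trained with mini-batch gradient descent on the mean squared error in NumPy. The function name `train_epochs` and its defaults (`epochs`, `batch_size`, `lr`, `seed`) are hypothetical choices for this sketch. Each outer iteration is one epoch: the samples are reshuffled, every sample is visited exactly once, and the parameters are updated once per mini-batch.

```python
import numpy as np


def train_epochs(x, y, epochs=20, batch_size=8, lr=0.05, seed=0):
    """Fit y ≈ w*x + b with mini-batch gradient descent on the MSE.

    One epoch = one complete pass over all len(x) samples, visited in a
    freshly shuffled order and split into mini-batches; the parameters
    are updated once per mini-batch.
    Returns (w, b), the training MSE after each epoch, and the total
    number of parameter updates.
    """
    rng = np.random.default_rng(seed)
    w, b = 0.0, 0.0
    n = len(x)
    losses, updates = [], 0
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle once per epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            err = w * x[idx] + b - y[idx]          # errors on this mini-batch
            w -= lr * 2.0 * np.mean(err * x[idx])  # dMSE/dw
            b -= lr * 2.0 * np.mean(err)           # dMSE/db
            updates += 1
        # training MSE over the whole dataset at the end of the epoch
        losses.append(float(np.mean((w * x + b - y) ** 2)))
    return (w, b), losses, updates


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    x = rng.uniform(-1.0, 1.0, 100)
    y = 3.0 * x + 0.5 + rng.normal(0.0, 0.1, 100)  # synthetic data
    (w, b), losses, updates = train_epochs(x, y)
    print(updates)  # 20 epochs x 13 mini-batches = 260 updates
    print([round(v, 4) for v in losses[:3]], "...", round(losses[-1], 4))
```

With 100 samples and `batch_size=8`, each epoch contains ceil(100/8) = 13 updates, so 20 epochs perform 260 updates. The per-epoch values in `losses` are exactly what a loss-versus-epoch plot would show.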

The number of epochs is a hyperparameter that machine learning practitioners need to tune. Too few epochs may leave the model underfit, unable to capture the underlying patterns in the data, while too many epochs can lead to overfitting, where the model learns the noise in the training data and performs poorly on unseen data. Monitoring the training loss and the validation loss over epochs shows when the model has learned as much as it can from the training data without overfitting. Early stopping builds on this signal: training halts once the validation loss stops improving (for example, when it starts increasing), which indicates the onset of overfitting.

To plot the loss (mean squared error, MSE) versus epoch for a training process, we need training data and a model to train. A simple linear regression model built with the popular deep learning library TensorFlow and its Keras API is enough to produce such a plot.
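The page's own example uses TensorFlow/Keras. As a dependency-light alternative, the sketch below swaps in plain NumPy with full-batch gradient descent (one parameter update per epoch, an assumption made to keep the code short) to show where the training and validation loss curves and early stopping come from. The names `fit_with_early_stopping`, `plot_history`, `patience` and `min_delta` are hypothetical here; the `loss`/`val_loss` keys simply mirror the names Keras uses in `History.history`.

```python
import numpy as np


def fit_with_early_stopping(x_tr, y_tr, x_val, y_val,
                            max_epochs=500, lr=0.1, patience=5, min_delta=1e-6):
    """Fit y ≈ w*x + b by full-batch gradient descent on the MSE.

    Each epoch is one pass over the training set (one update here).
    Training and validation MSE are recorded after every epoch; training
    stops once the validation MSE has failed to improve by more than
    `min_delta` for `patience` consecutive epochs, and the parameters
    from the best validation epoch are returned.
    """
    w, b = 0.0, 0.0
    history = {"loss": [], "val_loss": []}
    best_val, best_params, wait = np.inf, (w, b), 0
    for _ in range(max_epochs):
        err = w * x_tr + b - y_tr
        w -= lr * 2.0 * np.mean(err * x_tr)  # dMSE/dw
        b -= lr * 2.0 * np.mean(err)         # dMSE/db
        history["loss"].append(float(np.mean((w * x_tr + b - y_tr) ** 2)))
        val = float(np.mean((w * x_val + b - y_val) ** 2))
        history["val_loss"].append(val)
        if val < best_val - min_delta:
            best_val, best_params, wait = val, (w, b), 0  # new best epoch
        else:
            wait += 1
            if wait >= patience:
                break  # validation loss stopped improving: stop early
    return best_params, history


def plot_history(history):
    """Plot training and validation MSE against the epoch number."""
    import matplotlib.pyplot as plt  # lazy import: only needed for plotting
    epochs = range(1, len(history["loss"]) + 1)
    plt.plot(epochs, history["loss"], label="training loss (MSE)")
    plt.plot(epochs, history["val_loss"], label="validation loss (MSE)")
    plt.xlabel("Epoch")
    plt.ylabel("Loss (MSE)")
    plt.legend()
    plt.show()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.uniform(-1.0, 1.0, 120)
    y = 3.0 * x + 0.5 + rng.normal(0.0, 0.1, 120)  # synthetic data
    # hold out the last 24 samples (20%) for validation
    (w, b), history = fit_with_early_stopping(x[:96], y[:96], x[96:], y[96:])
    print(f"stopped after {len(history['loss'])} epochs: w={w:.3f}, b={b:.3f}")
```

Calling `plot_history(history)` afterwards draws the two loss-versus-epoch curves (it needs matplotlib installed). In Keras, the corresponding pieces are `history = model.fit(x, y, epochs=..., validation_split=0.2, callbacks=[tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=5, restore_best_weights=True)])`, followed by plotting `history.history["loss"]` and `history.history["val_loss"]`. A larger `patience` tolerates noisier validation curves at the cost of extra epochs after the optimum.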

Table 3955. Application of Epoch concept.
