Negative Log Likelihood (NLL) - Python and Machine Learning for Integrated Circuits - An Online Book


Python and Machine Learning for Integrated Circuits: http://www.globalsino.com/ICs/


Negative log likelihood (NLL) is a function widely used in statistics and machine learning, particularly in probabilistic models and maximum likelihood estimation. It measures the goodness of fit between a probability distribution (usually a model's predicted distribution) and a set of observed data points, where a lower value indicates a better fit. It is expressed as:

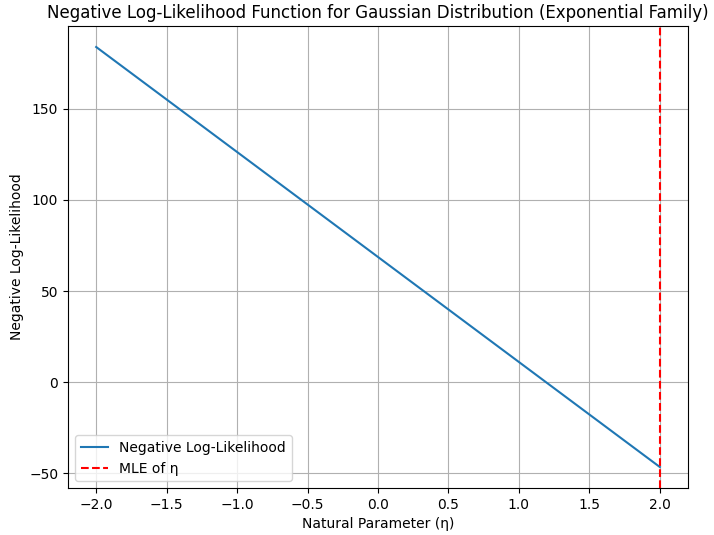
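
For reference, the conventional form of the NLL can be written out as follows. This is a sketch under the common assumption of independent observations $x_1, \dots, x_N$ and a model density $p(x \mid \theta)$; the symbols $x_i$, $N$, $L$, and $p$ are notation assumed here and may differ from the notation in the image above.

$$
\mathrm{NLL}(\theta) = -\log L(\theta) = -\log \prod_{i=1}^{N} p(x_i \mid \theta) = -\sum_{i=1}^{N} \log p(x_i \mid \theta)
$$

Because the logarithm turns the product into a sum, the NLL is usually easier to compute and differentiate than the likelihood itself.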

The idea behind using the negative log likelihood as a loss function is to find the model parameters (θ) that maximize the likelihood of observing the given data. Because the logarithm is monotonically increasing and negation reverses the ordering, maximizing the likelihood is equivalent to minimizing the negative log likelihood, which is why the NLL is often used in optimization problems. Figure 3863a shows the negative log likelihood (NLL), which is obtained by negating the log-likelihood values.
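
The equivalence between minimizing the NLL and maximizing the likelihood can be illustrated with a minimal Python sketch. It assumes a normal distribution with a known standard deviation and a handful of made-up observations; the function name `gaussian_nll`, the data values, and the grid of candidate means are hypothetical and chosen only for illustration. For this model the maximum likelihood estimate of the mean is the sample mean, so the grid point with the lowest NLL should agree with the sample mean to within the grid spacing.

```python
import math


def gaussian_nll(data, mu, sigma=1.0):
    """Negative log likelihood of i.i.d. data under N(mu, sigma^2)."""
    n = len(data)
    log_likelihood = (
        -0.5 * n * math.log(2.0 * math.pi * sigma ** 2)
        - sum((x - mu) ** 2 for x in data) / (2.0 * sigma ** 2)
    )
    return -log_likelihood  # negate the log likelihood to obtain the NLL


# Hypothetical observations, chosen only for illustration.
data = [4.8, 5.1, 5.3, 4.9, 5.4]

# Evaluate the NLL on a grid of candidate means from 4.00 to 6.00 (step 0.01).
candidates = [4.0 + 0.01 * i for i in range(201)]
nll_values = [gaussian_nll(data, mu) for mu in candidates]

# The candidate with the smallest NLL is the grid approximation of the MLE.
best_mu = candidates[nll_values.index(min(nll_values))]
sample_mean = sum(data) / len(data)

print(f"grid minimizer of NLL: {best_mu:.2f}")
print(f"sample mean:           {sample_mean:.2f}")
```

A grid search is used here only to keep the sketch dependency-free; in practice the NLL would typically be minimized with a gradient-based optimizer.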
