=================================================================================

Regression analysis is a predictive modelling technique that investigates the relationship between a dependent (target) variable and one or more independent (predictor) variables. Regression algorithms are a class of supervised machine learning algorithms used to predict continuous values. The technique is used for time series forecasting and for studying cause-and-effect relationships between variables.

Some common regression algorithms include:

- Linear regression
- Polynomial regression
- Ridge regression
- Lasso regression
- ElasticNet regression
- Decision tree regression
- Random forest regression
- Support vector regression (SVR)
- Gradient boosting regression (e.g., XGBoost, LightGBM, CatBoost)
- Neural network regression

Scikit-learn and TensorFlow can be used to solve problems related to regression.
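Most of the algorithms listed above have scikit-learn implementations that share the same `fit`/`predict` interface, so they can be compared side by side. The sketch below is a minimal illustration, assuming synthetic data from `make_regression` in place of a real dataset; the hyperparameters (`alpha`, `C`, `max_depth`, and so on) and the helper name `compare` are arbitrary choices, not tuned or recommended values. XGBoost, LightGBM, CatBoost and neural-network regressors are left out to keep the example dependency-free.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.linear_model import ElasticNet, Lasso, LinearRegression, Ridge
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

# Synthetic regression data (assumption: stands in for a real dataset)
X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)

models = {
    "linear": LinearRegression(),
    "polynomial (degree 2)": make_pipeline(PolynomialFeatures(degree=2), LinearRegression()),
    "ridge": Ridge(alpha=1.0),
    "lasso": Lasso(alpha=0.1),
    "elasticnet": ElasticNet(alpha=0.1, l1_ratio=0.5),
    "decision tree": DecisionTreeRegressor(max_depth=5, random_state=0),
    "random forest": RandomForestRegressor(n_estimators=100, random_state=0),
    # SVR is sensitive to feature scale, so standardize first
    "SVR": make_pipeline(StandardScaler(), SVR(C=100.0)),
    "gradient boosting": GradientBoostingRegressor(random_state=0),
}


def compare(models, X, y, cv=5):
    """Return the mean cross-validated R^2 score for each model."""
    return {
        name: cross_val_score(model, X, y, cv=cv, scoring="r2").mean()
        for name, model in models.items()
    }


if __name__ == "__main__":
    scores = compare(models, X, y)
    for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
        print(f"{name:>22}: R^2 = {score:.3f}")
```

Because the synthetic target is linear in the features, the linear models should score well here; on real data the ranking can differ, which is why cross-validation is used rather than a single train/test split.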

When you create a training pipeline for a regression model, add a step that cleans missing data so that the dataset is complete before training, rather than fixing the data by hand afterwards. This step checks the data for missing values and then either fixes the affected records or fills in (imputes) new values. The goal of such cleaning is to prevent the problems that missing data can cause when training a model.
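One way to make missing-data cleaning part of the pipeline itself is scikit-learn's `SimpleImputer` placed in front of the model in a `Pipeline`. The sketch below is a minimal example, assuming mean imputation is acceptable for the data; the toy values and the helper name `build_pipeline` are hypothetical. Because the imputer is fitted inside the pipeline, the same fill values learned from the training data are reused automatically for new data at prediction time.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline

# Hypothetical toy data with missing feature values (np.nan)
X = np.array([
    [1.0, 2.0],
    [2.0, np.nan],
    [np.nan, 1.0],
    [4.0, 3.0],
    [5.0, 5.0],
    [6.0, np.nan],
])
y = np.array([7.0, 10.0, 8.0, 18.0, 25.0, 22.0])


def build_pipeline(strategy="mean"):
    """Missing-value cleaning step followed by a linear regression model."""
    return Pipeline([
        # Replace each NaN with a per-column statistic (mean, median, ...)
        ("impute", SimpleImputer(strategy=strategy)),
        ("model", LinearRegression()),
    ])


pipe = build_pipeline().fit(X, y)
# Column means learned from the training data and used for filling
print(pipe.named_steps["impute"].statistics_)
# NaNs in new data are filled with the same learned values before predicting
print(pipe.predict(np.array([[3.0, np.nan]])))
```

Switching `strategy` to `"median"` is a common choice when a feature has outliers; dropping incomplete rows is the other option mentioned above, but it discards training data.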

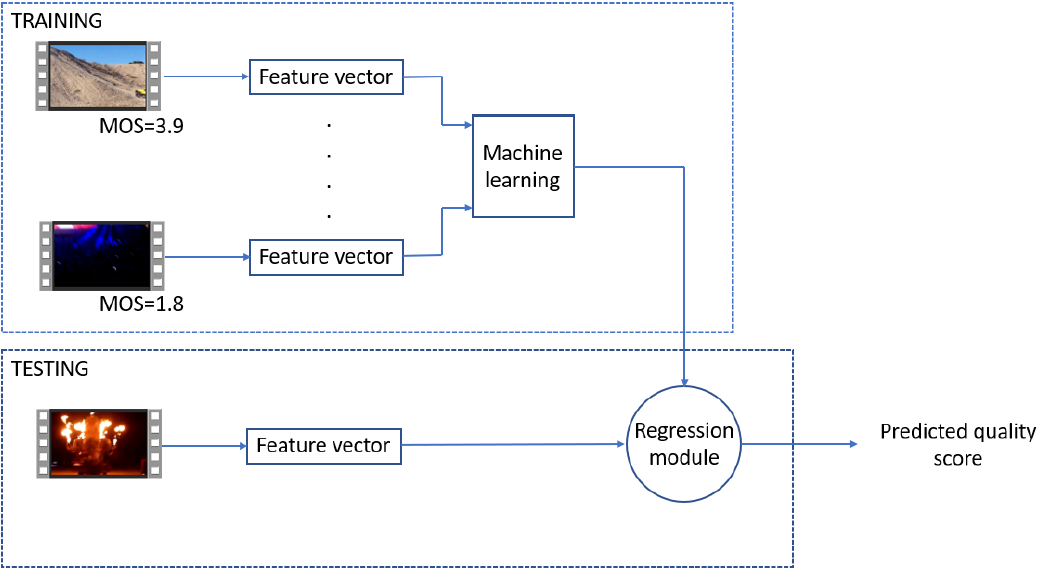

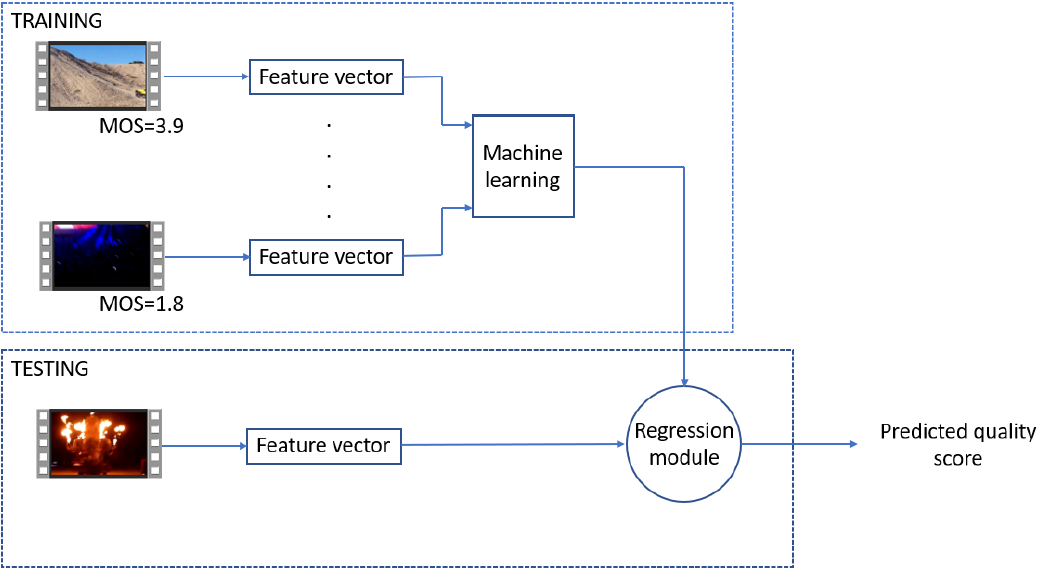

Figure 3856a. High-level overview of the proposed ML method in the publication. Video-level feature vectors are extracted from the training videos to train a machine-learning model. [1]

Linear regression can be refactored to take its data from a tf.data.Dataset and then trained with stochastic gradient descent. In this example the dataset is synthetic and is read by the tf.data API directly from memory; the tf.data API is then used to load a dataset that resides on disk. The steps for this application are:

iv.a) Use tf.data to read data from memory.

iv.b) Use tf.data in a training loop.

iv.c) Use tf.data to read data from disk.

iv.d) Write production input pipelines with feature engineering (batching, shuffling, etc.).
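The four steps above can be sketched in TensorFlow 2 as follows. This is a minimal illustration, not the original tutorial's code: the synthetic data (y = 2*x0 - 3*x1 + 1 plus noise), the learning rate, batch size, number of epochs, the file name `synthetic.csv`, and the helper names `make_memory_dataset`, `make_disk_dataset` and `train` are all hypothetical choices. The model is plain linear regression whose weights are updated by mini-batch stochastic gradient descent on the mean squared error.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Synthetic data (assumption): y = 2*x0 - 3*x1 + 1 + small Gaussian noise
N_POINTS = 1000
TRUE_W = np.array([2.0, -3.0], dtype=np.float32)
TRUE_B = 1.0
rng = np.random.default_rng(0)
X = rng.normal(size=(N_POINTS, 2)).astype(np.float32)
Y = (X @ TRUE_W + TRUE_B + 0.1 * rng.normal(size=N_POINTS)).astype(np.float32)


def make_memory_dataset(x, y, batch_size=32, epochs=1):
    """iv.a) Read data from memory with tf.data."""
    ds = tf.data.Dataset.from_tensor_slices((x, y))
    return ds.shuffle(len(x), seed=0).repeat(epochs).batch(batch_size)


def train(dataset, lr=0.1):
    """iv.b) Stochastic gradient descent for linear regression in a training loop."""
    w = tf.Variable(tf.zeros([2]))
    b = tf.Variable(0.0)
    for xb, yb in dataset:
        with tf.GradientTape() as tape:
            pred = tf.linalg.matvec(xb, w) + b
            loss = tf.reduce_mean(tf.square(pred - yb))  # mean squared error
        dw, db = tape.gradient(loss, [w, b])
        w.assign_sub(lr * dw)
        b.assign_sub(lr * db)
    return w.numpy(), float(b.numpy())


def make_disk_dataset(csv_path, batch_size=32, epochs=1):
    """iv.c) Read the same data from a CSV file on disk."""
    def parse_line(line):
        x0, x1, y = tf.io.decode_csv(line, record_defaults=[0.0, 0.0, 0.0])
        return tf.stack([x0, x1]), y

    ds = tf.data.TextLineDataset(csv_path).skip(1)  # skip the header row
    # iv.d) Production-style pipeline: parallel parsing, shuffling, batching, prefetching
    ds = ds.map(parse_line, num_parallel_calls=tf.data.AUTOTUNE)
    ds = ds.shuffle(1000, seed=0).repeat(epochs).batch(batch_size)
    return ds.prefetch(tf.data.AUTOTUNE)


w_mem, b_mem = train(make_memory_dataset(X, Y, epochs=5))

with tempfile.TemporaryDirectory() as tmp:
    csv_path = os.path.join(tmp, "synthetic.csv")
    np.savetxt(csv_path, np.column_stack([X, Y]), delimiter=",",
               fmt="%.6f", header="x0,x1,y", comments="")
    w_disk, b_disk = train(make_disk_dataset(csv_path, epochs=5))

print("from memory:", w_mem, b_mem)
print("from disk:  ", w_disk, b_disk)
```

Both runs should recover weights close to the assumed true values. The `prefetch` call lets the input pipeline prepare the next batch while the current one is being processed, which matters more once data is read from disk.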

Regression is a statistical technique for modeling the relationship between a dependent variable and one or more independent variables, and it is commonly used to predict continuous outcomes. Below are some examples of problems that are regression problems and some that are not:

Some regression applications are:

- Predicting House Prices: Given features like square footage, number of bedrooms, and location, you can use regression to predict the price of a house, which is a continuous variable.
- Forecasting Stock Prices: Predicting future stock prices or returns based on historical price data, trading volume, and other financial and economic indicators.
- Salary Prediction: Using factors such as education, years of experience, and job type, you can predict a person's salary.
- Temperature Prediction: You can use regression to predict future temperatures based on historical weather data and other factors.
- GPA Prediction: Given a student's study hours, attendance, and other factors, you can predict their final GPA.
- Demand Forecasting: Estimating future product or service demand, which is critical for inventory management and supply chain optimization.
- Healthcare Outcome Prediction: Predicting patient outcomes, such as disease progression, mortality, or readmission rates, based on medical history, vital signs, and laboratory results.
- Customer Churn Risk: Estimating a continuous churn score, such as the probability that a customer will leave a subscription service or cancel a contract, based on usage patterns and customer demographics. (Predicting the yes/no churn outcome itself is a classification problem.)
- Energy Consumption Forecasting: Predicting energy consumption for efficient resource allocation, for example in electricity demand forecasting.
- Crop Yield Prediction: Estimating agricultural crop yields based on factors like weather conditions, soil quality, and farming practices.
- Quality Control: Predicting product quality or defects in manufacturing processes by analyzing sensor data and process parameters.
- Environmental Modeling: Predicting air quality, water pollution levels, or weather patterns using sensor data and historical information.
- Economic Forecasting: Predicting economic indicators such as GDP growth, inflation rates, or unemployment rates based on historical economic data and external factors.
- Customer Lifetime Value Prediction: Estimating the long-term value of a customer to a business based on their historical purchasing behavior.
- Insurance Risk Assessment: Assessing the risk of insurance claims by predicting the likelihood and cost of future claims based on policyholder information.
- Traffic Flow Prediction: Predicting traffic congestion and flow patterns on road networks for urban planning and traffic management.
- Sports Performance Analysis: Predicting athlete performance, such as running times or sports scores, based on training data, weather conditions, and other relevant factors.

Does Not Belong to Regression:

- Classification of Email as Spam or Not: This is a classification problem, not regression. You're trying to categorize emails into discrete classes (spam or not spam).
- Customer Churn Prediction: Predicting whether a customer will churn (leave) a service provider is a classification problem. You're classifying customers into two groups: churned or not churned.
- Gender Prediction: Given certain characteristics, predicting whether an individual is male or female is a classification task, not regression.
- Car Make and Model Recognition: Identifying the make and model of a car in an image is an object recognition problem, which falls under image classification or object detection, not regression.
- Customer Satisfaction Rating: While you may use regression to predict the customer satisfaction score based on various factors, this score is typically a discrete value (e.g., 1 to 5), so it can be treated as a classification problem or ordinal regression.
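A quick, rough check of which side of this line a target falls on is to look at the kind of values it takes. scikit-learn's `type_of_target` utility reports `"continuous"` for real-valued targets and `"binary"` or `"multiclass"` for discrete ones. The sketch below assumes small made-up targets for the examples above, and the helper `task_hint` is hypothetical. It is only a heuristic: as the satisfaction-rating item notes, an integer 1 to 5 score can still reasonably be modeled with ordinal regression.

```python
from sklearn.utils.multiclass import type_of_target


def task_hint(target):
    """Hypothetical helper: suggest 'regression' or 'classification' from target values."""
    kind = type_of_target(target)
    return "regression" if kind == "continuous" else "classification"


examples = {
    "house price (USD)": [245000.5, 312500.0, 189999.9],
    "spam or not spam": [1, 0, 0, 1],
    "satisfaction rating (1-5)": [5, 3, 4, 1, 2],
}

for name, target in examples.items():
    print(f"{name}: {type_of_target(target)} -> {task_hint(target)}")
```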

Machine learning techniques encompass a wide range of algorithms and approaches for solving various types of problems. Here are some examples of situations where different machine learning techniques might be used:

Supervised Learning:

- Linear Regression: Predicting the price of a house based on its features, like square footage and number of bedrooms.
- Logistic Regression: Predicting whether a customer will buy a product (yes/no) based on their browsing behavior and demographic information.
- Decision Trees: Determining if a loan application should be approved based on an applicant's credit score, income, and other factors.
- Random Forest: Classifying whether an email is spam or not based on its content and sender.
- Support Vector Machines (SVM): Identifying whether an image contains a cat or a dog based on image features.

Unsupervised Learning:

- K-Means Clustering: Grouping customers into segments based on their purchase behavior for targeted marketing.
- Hierarchical Clustering: Organizing documents into a hierarchical structure based on their textual content.
- Principal Component Analysis (PCA): Reducing the dimensionality of a dataset for visualization or feature extraction.
- Association Rule Mining: Discovering patterns in market basket data to suggest product recommendations.
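The K-Means and PCA examples above can be illustrated together with scikit-learn. This is a minimal sketch, assuming synthetic "customer" data from `make_blobs` stands in for real purchase-behavior features; the six features and three segments are arbitrary. No labels are passed to either algorithm, which is what makes them unsupervised.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Hypothetical customer data: 300 customers, 6 behavior features, 3 latent segments.
# The generated labels are discarded (unsupervised setting).
X, _ = make_blobs(n_samples=300, n_features=6, centers=3, random_state=0)
X_scaled = StandardScaler().fit_transform(X)

# K-Means: group customers into 3 segments
segments = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X_scaled)

# PCA: reduce the 6 features to 2 components, e.g. to plot the segments
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_scaled)

print("segment sizes:", [int((segments == k).sum()) for k in range(3)])
print("variance explained by 2 components:", pca.explained_variance_ratio_.sum())
```

In practice the number of clusters is not known in advance and is usually chosen with a criterion such as the silhouette score.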

Reinforcement Learning:

- Game AI: Training a computer program to play games like chess, Go, or video games.
- Autonomous Vehicles: Teaching self-driving cars to navigate safely through traffic.
- Robotics: Developing robots that can learn to perform tasks like picking and placing objects.

Natural Language Processing (NLP):

- Sentiment Analysis: Analyzing social media data to determine public sentiment towards a product or event.
- Text Classification: Categorizing news articles into topics like politics, sports, or entertainment.
- Named Entity Recognition: Extracting names of people, organizations, and locations from a text.

Deep Learning:

- Convolutional Neural Networks (CNNs): Image classification tasks, such as identifying objects in images or recognizing faces.
- Recurrent Neural Networks (RNNs): Language modeling, speech recognition, and time series prediction.
- Generative Adversarial Networks (GANs): Generating realistic images or videos, such as artwork or deepfakes.
- Transformer Models: Natural language understanding and generation, as seen in models like BERT and GPT.

Regression machine learning techniques are also applied in the semiconductor industry for various purposes. Here are some examples of their use in this field:

- Wafer Defect Prediction: Predicting the likelihood and location of defects on semiconductor wafers during manufacturing processes. Regression models can analyze historical data to identify factors contributing to defects and improve yield.
- Equipment Maintenance Prediction: Predicting the maintenance needs of semiconductor manufacturing equipment based on sensor data and historical maintenance records. This helps prevent costly equipment breakdowns.
- Process Optimization: Optimizing semiconductor manufacturing processes by modeling the relationship between process parameters (e.g., temperature, pressure, time) and the quality of the fabricated chips. Regression helps in finding the optimal settings.
- Yield Improvement: Predicting and improving the yield of semiconductor chips by identifying and addressing factors that affect the number of good chips produced per wafer.
- Product Quality Prediction: Predicting the quality of semiconductor chips or components based on various process variables and characteristics. This helps ensure that only high-quality products are shipped to customers.
- Throughput Prediction: Predicting the throughput of semiconductor fabrication facilities to manage production schedules and optimize resource allocation.
- Energy Consumption Optimization: Predicting energy consumption in semiconductor manufacturing plants to optimize energy usage, reduce costs, and minimize environmental impact.
- Supply Chain Forecasting: Predicting semiconductor demand and supply chain requirements to ensure the availability of materials and components for production.
- Equipment Calibration: Predicting the required calibration adjustments for manufacturing equipment to maintain precision and accuracy in semiconductor fabrication processes.
- Material Usage Efficiency: Predicting material usage and waste generation during manufacturing to reduce material costs and minimize waste disposal.
- Die Size Estimation: Predicting the size and dimensions of semiconductor dies based on design parameters, which is crucial for yield estimation and cost optimization.
- Failure Analysis: Predicting potential failure modes and root causes of semiconductor device failures based on test data, enabling more effective failure analysis and debugging.

Regression techniques in the semiconductor industry often involve large datasets, complex process variables, and the need for high precision and reliability, making machine learning an important tool for optimizing manufacturing processes and improving product quality.
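The "Process Optimization" item above says regression helps in finding optimal settings. One common way to do this is a quadratic (polynomial regression) response-surface fit whose maximum is then located analytically. The sketch below uses NumPy on simulated, hypothetical data: yield versus a single anneal temperature, with an assumed optimum at 450 °C. Real processes usually involve several interacting parameters, so this only shows the one-variable idea.

```python
import numpy as np

# Hypothetical process data: yield (%) vs. anneal temperature (deg C),
# simulated from a quadratic response with its optimum at 450 deg C plus noise.
rng = np.random.default_rng(0)
temperature = np.linspace(400.0, 500.0, 21)
yield_pct = 90.0 - 0.004 * (temperature - 450.0) ** 2 + rng.normal(0.0, 0.3, temperature.size)


def fit_optimum(x, y):
    """Fit y ~ a*x^2 + b*x + c by least squares and return the x maximizing the fit."""
    a, b, _c = np.polyfit(x, y, deg=2)
    if a >= 0:
        raise ValueError("fitted curve has no maximum (a >= 0)")
    return -b / (2.0 * a)  # vertex of the fitted parabola


best_t = fit_optimum(temperature, yield_pct)
print(f"estimated optimal temperature: {best_t:.1f} deg C")
```

The fitted optimum should only be trusted inside the range of settings that were actually measured; extrapolating a quadratic fit beyond the data is unreliable.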

Regression machine learning techniques are commonly used in various applications within the field of electrical and computer engineering. Here are some examples:

- Power Consumption Prediction: Predicting the power consumption of electronic devices, circuits, or systems based on their design specifications and usage patterns. This is essential for energy-efficient design and management.
- Analog Circuit Design: Optimizing the parameters of analog circuits, such as amplifiers or filters, to meet specific performance criteria, like gain, bandwidth, or noise figure.
- Fault Detection in Electronics: Identifying faults or anomalies in electronic circuits or systems by analyzing sensor data or measurements, enabling proactive maintenance and fault diagnosis.
- Signal Processing: Regression can be used for various signal processing tasks, such as noise reduction, feature extraction, or speech recognition, where the goal is to predict or estimate certain signal characteristics.
- VLSI Yield Prediction: Predicting the yield of Very Large Scale Integration (VLSI) chips during the manufacturing process based on process parameters and design features.
- IC Thermal Management: Predicting the temperature distribution within integrated circuits (ICs) to optimize thermal management strategies and prevent overheating.
- Antenna Design: Optimizing the design parameters of antennas, such as dimensions and materials, to achieve desired performance characteristics, like radiation pattern or bandwidth.
- Network Performance Prediction: Predicting network performance metrics like latency, throughput, or packet loss based on network configuration and traffic patterns, aiding in network optimization.
- Computer Vision and Image Processing: Regression models are used for tasks such as image denoising, object tracking, and facial recognition, where the goal is to predict or estimate features within images or video streams.
- Hardware Design Optimization: Predicting the performance of hardware designs, including CPUs, GPUs, or custom accelerators, to optimize their architecture and parameters for specific applications.
- Audio and Speech Processing: Regression techniques can be applied to tasks like audio source separation and speech synthesis, where the model predicts continuous signal values. (Music genre classification, by contrast, is a classification task.)
- Robotics: Regression is used in robotics for tasks such as robot localization, mapping, and path planning, where the goal is to predict the robot's position or plan optimal paths.
- Wireless Communication: Predicting link quality, channel conditions, and interference levels in wireless communication systems to improve network planning and resource allocation.

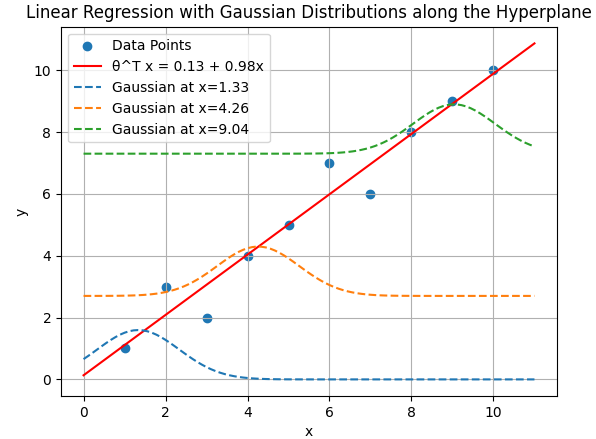

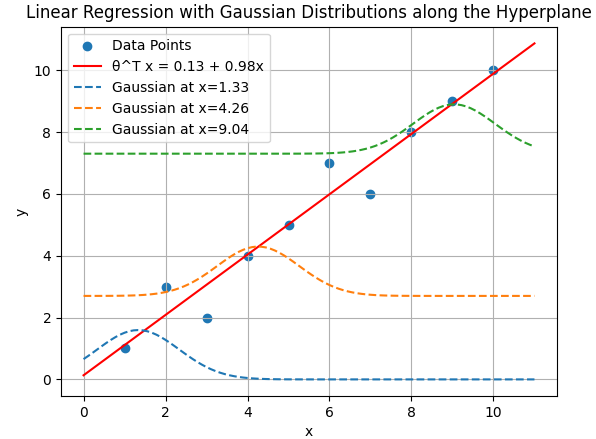

Figure 3856b shows linear regression plotted with the hyperplane $\eta = \theta^T x$. For a Gaussian distribution, the natural parameter $\eta$ is equal to the mean $\mu$ (see page 3868), so the Gaussian distributions of $y$ are centered along the hyperplane.

Figure 3856b. Linear regression plotted with the hyperplane $\theta^T x$ (Python code).
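The relationship in Figure 3856b can be checked numerically. The following is a minimal NumPy sketch, not the figure's original code, assuming one feature plus an intercept (so $\theta^T x$ is a line) and made-up parameter values. Data are drawn from a Gaussian whose mean is $\mu = \eta = \theta^T x$, and $\theta$ is recovered by ordinary least squares, which coincides with the maximum-likelihood estimate when the noise is Gaussian.

```python
import numpy as np

# Simulate y ~ N(mu, sigma^2) with mu = eta = theta^T x (assumed parameter values)
rng = np.random.default_rng(42)
n = 500
theta_true = np.array([1.5, -0.7])       # [intercept, slope]
sigma_true = 0.5
x = rng.uniform(-3.0, 3.0, n)
X = np.column_stack([np.ones(n), x])     # design matrix with a bias column
y = X @ theta_true + rng.normal(0.0, sigma_true, n)  # Gaussian noise around the hyperplane

# Under Gaussian noise, maximizing the likelihood is equivalent to least squares
theta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

# MLE of the noise standard deviation from the residuals
residuals = y - X @ theta_hat
sigma_hat = np.sqrt(np.mean(residuals ** 2))

print("theta_hat:", theta_hat)
print("sigma_hat:", sigma_hat)
```

Plotting `x` against `y` together with the fitted line `X @ theta_hat` gives a picture in the spirit of the figure: the points scatter symmetrically around the line with spread close to `sigma_hat`.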

============================================
