Geometric Margin in ML - Python and Machine Learning for Integrated Circuits - An Online Book


Python and Machine Learning for Integrated Circuits http://www.globalsino.com/ICs/


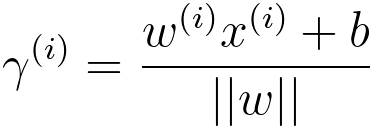

In machine learning, the geometric margin is a concept used primarily in support vector machines (SVMs) and is related to the idea of finding a hyperplane that best separates different classes of data points. It measures how well the decision boundary (hyperplane) created by an SVM separates the classes, and it is defined as the distance between the hyperplane and the nearest data point from either class. The geometric margin is denoted γ (gamma), and in a well-posed SVM problem the goal is to maximize γ. When w and b are scaled so that the closest data points satisfy |wᵀx + b| = 1 (the usual canonical scaling of the hyperplane), the geometric margin can be expressed as:

$$\gamma = \frac{1}{\lVert w \rVert} \qquad [3815a]$$

where:

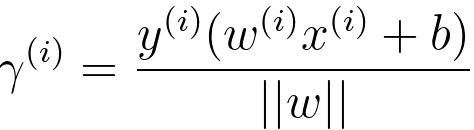
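As a quick numerical check of Equation [3815a], the sketch below rescales a hand-picked hyperplane (w, b) so that the closest point has a functional margin of exactly 1, and then compares the smallest point-to-hyperplane distance with 1/||w||. The toy data, the hyperplane, and the helper name `geometric_margins` are hypothetical and chosen only for illustration; the hyperplane is not the output of an SVM solver. Only NumPy is assumed.

```python
import numpy as np

def geometric_margins(w, b, X, y):
    """Hypothetical helper: per-example geometric margins y_i * (w^T x_i + b) / ||w||."""
    w = np.asarray(w, dtype=float)
    return y * (X @ w + b) / np.linalg.norm(w)

# Hypothetical toy data: two points per class, labels in {-1, +1}
X = np.array([[2.0, 2.0], [3.0, 3.0], [0.0, 0.0], [-1.0, -1.0]])
y = np.array([1, 1, -1, -1])

# A hand-picked separating hyperplane w^T x + b = 0 (not learned by an SVM)
w = np.array([1.0, 1.0])
b = -2.0

# Canonical scaling: divide (w, b) by the smallest functional margin,
# so the closest point ends up with y_i * (w^T x_i + b) = 1
functional = y * (X @ w + b)
scale = functional.min()
w_c, b_c = w / scale, b / scale

# Smallest geometric margin vs. the closed form 1 / ||w|| from [3815a]
gamma = geometric_margins(w_c, b_c, X, y).min()
print(gamma, 1.0 / np.linalg.norm(w_c))
```

Because the geometric margin does not change when w and b are multiplied by the same positive constant, calling `geometric_margins(w, b, X, y)` with the unscaled hyperplane gives the same minimum (about 1.414); only the identity γ = 1/||w|| depends on the canonical scaling.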

For the SVM case, the geometric margin at (x(i), y(i)) is given by, where:

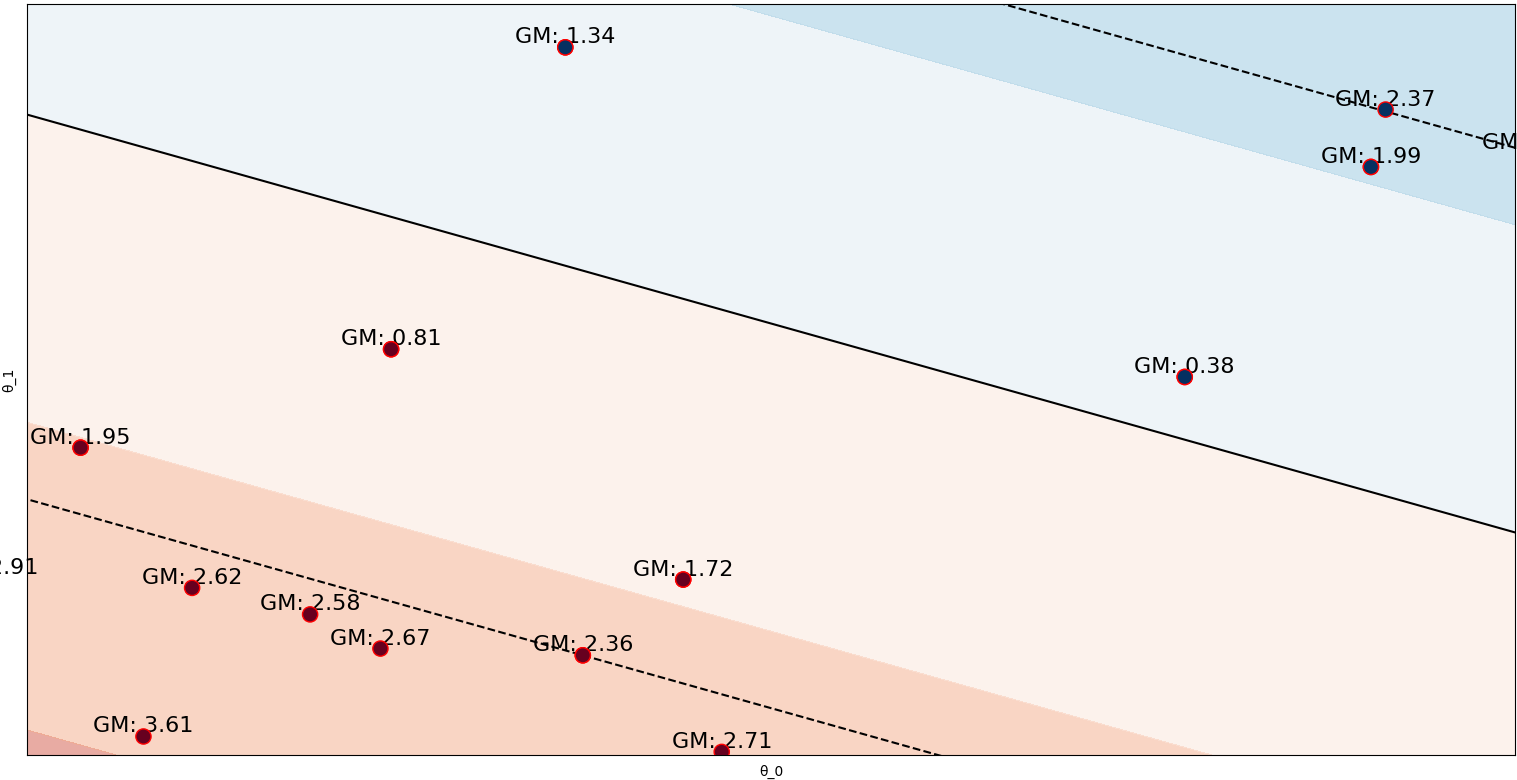

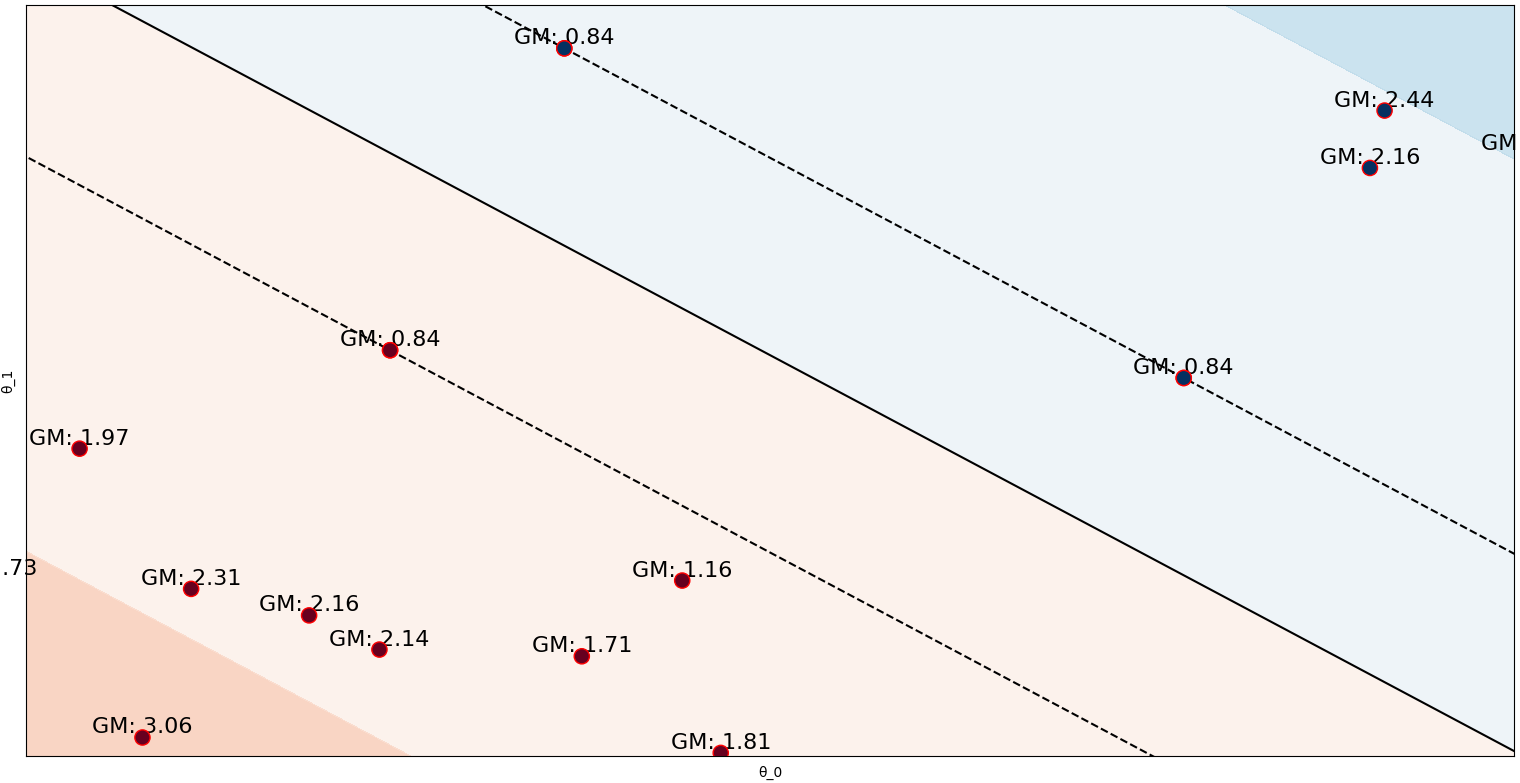

Here ŷ(i) is the signed distance from the decision boundary to the data point x(i); its sign indicates which side of the hyperplane the point falls on. Equation 3815c gives a more general expression that also includes the class label y(i) ∈ {−1, +1}, so that both sides of the decision boundary are handled. A point with y(i) = +1 is correctly classified when ŷ(i) is positive, and a point with y(i) = −1 is correctly classified when ŷ(i) is negative; in both cases, multiplying by y(i) yields a positive value exactly when the point is correctly classified. In practice, the SVM algorithm finds the hyperplane that maximizes this margin by solving a constrained optimization problem. The key idea is to find a hyperplane that not only separates the data but also lies as far as possible from the nearest points of both classes, which makes it less sensitive to small variations in the data and tends to improve generalization to unseen data. Figure 3815 shows the functional margin and the geometric margin for two datasets.

Figure 3815. Functional margin and geometric margin for two datasets, panels (a) and (b).
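The per-point quantities described above can be sketched in NumPy. The snippet computes the signed distance of each point to a hyperplane, checks correct classification through the sign of the label-weighted distance, and compares the minimum geometric margin of two candidate hyperplanes. The data, both hyperplanes, and the helper names `signed_distance` and `min_geometric_margin` are hypothetical; a real SVM would obtain (w, b) by solving its constrained optimization problem rather than by hand.

```python
import numpy as np

def signed_distance(w, b, X):
    """Hypothetical helper: signed distance (w^T x + b) / ||w|| of each row of X."""
    return (X @ w + b) / np.linalg.norm(w)

def min_geometric_margin(w, b, X, y):
    """Hypothetical helper: smallest label-weighted signed distance over the data."""
    return np.min(y * signed_distance(w, b, X))

# Hypothetical toy data; labels are in {-1, +1}
X = np.array([[2.0, 2.0], [3.0, 3.0], [0.0, 0.0], [-1.0, -1.0]])
y = np.array([1, 1, -1, -1])

# Two candidate hyperplanes, both chosen by hand for illustration
w1, b1 = np.array([1.0, 1.0]), -2.0   # x1 + x2 = 2
w2, b2 = np.array([1.0, 0.0]), -1.0   # x1 = 1

d = signed_distance(w1, b1, X)   # sign tells which side each point is on
correct = y * d > 0              # positive product <=> correctly classified
print(d, correct)

# A maximum-margin classifier prefers the hyperplane with the larger minimum margin
print(min_geometric_margin(w1, b1, X, y), min_geometric_margin(w2, b2, X, y))
```

Both hyperplanes separate the four points, but x1 + x2 = 2 has a minimum geometric margin of about 1.414 versus 1.0 for x1 = 1, so a maximum-margin classifier would prefer the first.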

