Gini Loss - Python Automation and Machine Learning for ICs - An Online Book


Python Automation and Machine Learning for ICs http://www.globalsino.com/ICs/


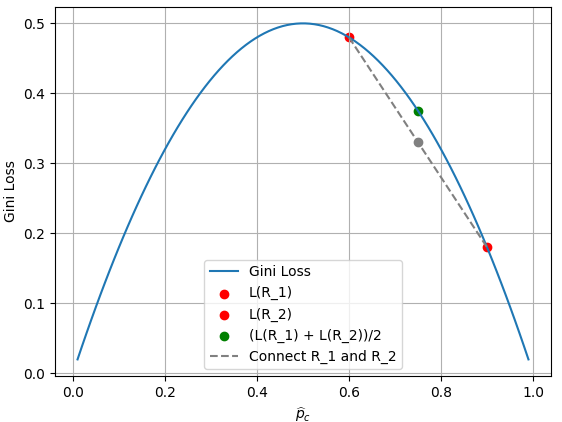

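Gini loss is often used as the criterion for splitting nodes in decision trees. The loss of a candidate split is the weighted sum of the Gini impurities of the child nodes, with each child weighted by the fraction of the parent's samples that it receives. When building a decision tree, the algorithm selects the split that minimizes this loss. The Gini loss of a region R is given by,

$$L_{\text{Gini}}(R) = \sum_{c=1}^{C} \hat{p}_c\,(1-\hat{p}_c) = 1 - \sum_{c=1}^{C} \hat{p}_c^{\,2} \qquad \text{[3757a]}$$

where $C$ is the number of classes and $\hat{p}_c$ is the proportion of the samples in R that belong to class $c$.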
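A minimal Python sketch of the Gini loss of a region, $\sum_c \hat{p}_c(1-\hat{p}_c)$, and of the weighted split loss, assuming a classification problem whose class labels are stored in a plain list and a single numeric feature split by a threshold. The function names `gini_loss`, `split_loss` and `best_threshold` are hypothetical and are not taken from the book; the exhaustive midpoint search is just one simple way to find the minimizing split.

```python
from collections import Counter


def gini_loss(labels):
    """Gini loss of a region: sum_c p_c * (1 - p_c), computed as 1 - sum_c p_c**2."""
    n = len(labels)
    if n == 0:
        return 0.0  # an empty region contributes no loss
    counts = Counter(labels)
    return 1.0 - sum((k / n) ** 2 for k in counts.values())


def split_loss(left, right):
    """Weighted sum of the child losses; each child is weighted by its share of the parent's samples."""
    n = len(left) + len(right)
    return (len(left) / n) * gini_loss(left) + (len(right) / n) * gini_loss(right)


def best_threshold(x, y):
    """Try the midpoint between every pair of adjacent distinct feature values.

    Returns (threshold, loss) for the split with the lowest weighted Gini loss.
    """
    values = sorted(set(x))
    best = (None, float("inf"))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        loss = split_loss(left, right)
        if loss < best[1]:  # strict '<' keeps the first of any tied thresholds
            best = (t, loss)
    return best


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = ["A", "A", "A", "B", "B", "A"]
print(gini_loss(y))          # parent loss, 1 - (4/6)**2 - (2/6)**2
print(best_threshold(x, y))  # threshold 3.5, loss 2/9
```

For this toy data the parent loss is 4/9 (about 0.444), and the best split is at x = 3.5: the left child {A, A, A} is pure and the right child {B, B, A} has loss 4/9, giving a weighted loss of 0.5 × 4/9 = 2/9 (about 0.222). Normalizing by the parent size, as done here, does not change which split wins, because every candidate split of the same node is divided by the same total.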

Equation 3757a is used in decision trees and random forests. Assuming a parent region is split into two child regions, R1 and R2, Figure 3757a shows the plot of the Gini loss in Equation 3757a.
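The curve in such a plot can be tabulated with a short Python sketch, assuming the figure shows the two-class case with the loss plotted against the class-1 fraction $\hat{p}$ and the two child regions marked on the curve. The sample counts for the parent, R1 and R2 below are made up for illustration, and `binary_gini` is a hypothetical helper name.

```python
def binary_gini(p):
    """Two-class Gini loss: 1 - p**2 - (1 - p)**2, which simplifies to 2p(1 - p)."""
    return 2.0 * p * (1.0 - p)


# Hypothetical split: a parent region of 10 samples (4 in class 1) is divided into
# R1 with 4 samples (3 in class 1) and R2 with 6 samples (1 in class 1).
n1, k1 = 4, 3
n2, k2 = 6, 1
n, k = n1 + n2, k1 + k2

p1, p2, p_parent = k1 / n1, k2 / n2, k / n  # class-1 fractions: 0.75, 1/6, 0.4
parent_loss = binary_gini(p_parent)
# Weighted average of the child losses, each child weighted by its share of samples.
children_loss = (n1 / n) * binary_gini(p1) + (n2 / n) * binary_gini(p2)

# Coarse table of the curve L(p) = 2p(1 - p); it peaks at p = 0.5 with L = 0.5.
for i in range(11):
    p = i / 10
    print(f"p = {p:.1f}   L = {binary_gini(p):.3f}")

print(f"parent: p = {p_parent:.3f}, L = {parent_loss:.3f}")
print(f"children (weighted): L = {children_loss:.3f}")
```

The parent's class fraction, 0.4, equals the sample-weighted average of the children's fractions (0.4 × 0.75 + 0.6 × 1/6), so the parent sits on the curve directly above a point on the chord joining R1 and R2. Because 2p(1 − p) is strictly concave, the weighted child loss (about 0.317) falls below the parent loss (0.480), which is why a split whose children have different class proportions always reduces the Gini loss.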
