Number of Neurons and Layers in Neural Network - Python Automation and Machine Learning for ICs - An Online Book


Python Automation and Machine Learning for ICs http://www.globalsino.com/ICs/


The architecture of a neural network, including the number of neurons and layers, depends on the specific problem we are trying to solve. There is no one-size-fits-all answer, and choosing an architecture is not a solved problem: no general rule gives the right number of neurons and layers in advance. In practice, the choice involves a combination of domain knowledge, experimentation, and tuning. Some general considerations are:

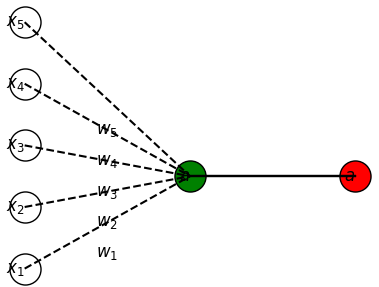

Figure 3724 shows a single neuron with its connections. The green ball represents the activation function, $a = \sigma(z)$, where $z$ is given by:

$$z = w_1x_1 + w_2x_2 + \cdots + w_nx_n \qquad [3724a]$$

Figure 3724. A single neuron with its connections (Code).

Equation 3724a is a linear combination: $z$ is a weighted sum of the input variables ($x_1, x_2, \ldots, x_n$) with corresponding weights ($w_1, w_2, \ldots, w_n$). As the number of input variables $n$ increases, the weights $w_i$ need to be smaller to keep $z$ from becoming too large, which helps prevent vanishing and exploding gradients. The appropriate $w_i$ should therefore be chosen with a scale that depends on $n$ (Equation 3724b). This is why weight initialization (choosing appropriate initial values for the weights) is crucial in mitigating vanishing and exploding gradients: if the weights are too large, gradients can explode; if they are too small, gradients can vanish.
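The computation in Equation 3724a can be sketched in a few lines of Python. This is a minimal illustration, assuming a sigmoid activation for $\sigma$ (the text does not say which activation the figure uses); the helper names `sigmoid`, `sigmoid_grad`, and `neuron_forward` are hypothetical. The derivative is included to show why a large $z$ is a problem: the sigmoid saturates and its gradient approaches zero.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, assumed here as the activation sigma."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Derivative of the sigmoid: sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)

def neuron_forward(weights, inputs, activation=sigmoid):
    """Compute z = w1*x1 + ... + wn*xn (Equation 3724a), then a = activation(z)."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length n")
    z = sum(w * x for w, x in zip(weights, inputs))
    return z, activation(z)

# Illustrative values for n = 3 inputs
z, a = neuron_forward([0.5, -0.25, 0.1], [1.0, 2.0, 3.0])
print(f"z = {z:.4f}, a = {a:.4f}")

# A moderate z keeps a usable gradient; a large z saturates the sigmoid
print(f"gradient at z = {z:.1f}: {sigmoid_grad(z):.4f}")
print(f"gradient at z = 20.0: {sigmoid_grad(20.0):.2e}")
```

The gradient at $z = 20$ is many orders of magnitude smaller than near $z = 0$, which is the saturation effect that large weighted sums cause with this activation.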


---------------------------------------------- [3724b]
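The effect of the number of inputs $n$ on the scale of $z$ can be checked numerically. The sketch below assumes zero-mean Gaussian weights and standard-normal inputs, all independent, so that $\mathrm{Var}(z) = n\,\mathrm{Var}(w_i)$. It compares unscaled weights ($\mathrm{Var}(w_i) = 1$) with the LeCun-style choice $\mathrm{Var}(w_i) = 1/n$; that choice is a commonly used scheme, assumed here for illustration rather than a reconstruction of Equation 3724b. The function name `sample_z_variance` is hypothetical.

```python
import math
import random
import statistics

def sample_z_variance(n, weight_std, trials=1000, seed=0):
    """Empirical variance of z = w_1*x_1 + ... + w_n*x_n over many random draws.

    Assumes w_i ~ N(0, weight_std**2) and x_i ~ N(0, 1), all independent.
    """
    rng = random.Random(seed)
    zs = []
    for _ in range(trials):
        z = sum(rng.gauss(0.0, weight_std) * rng.gauss(0.0, 1.0) for _ in range(n))
        zs.append(z)
    return statistics.pvariance(zs)

for n in (10, 100, 500):
    unscaled = sample_z_variance(n, weight_std=1.0)               # Var(w_i) = 1
    scaled = sample_z_variance(n, weight_std=math.sqrt(1.0 / n))  # Var(w_i) = 1/n (LeCun-style, assumed)
    print(f"n={n:4d}  Var(z) unscaled ~ {unscaled:7.1f}  scaled ~ {scaled:4.2f}")
```

With unscaled weights the variance of $z$ grows roughly in proportion to $n$, whereas with $\mathrm{Var}(w_i) = 1/n$ it stays close to 1 for every $n$. He initialization, which uses $\mathrm{Var}(w_i) = 2/n$ for ReLU layers, follows the same idea.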
