Weight Initialization - Python Automation and Machine Learning for ICs - An Online Book

Python Automation and Machine Learning for ICs http://www.globalsino.com/ICs/

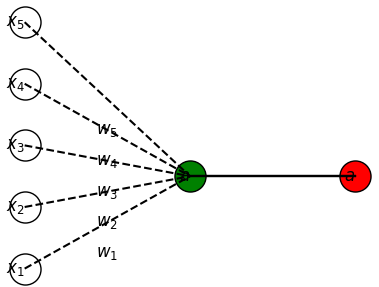

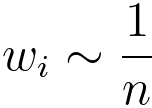

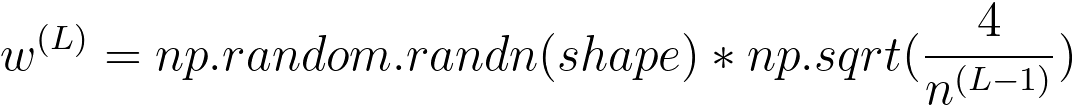

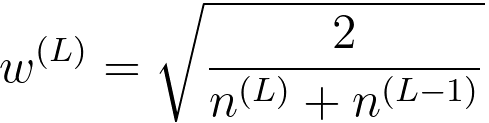

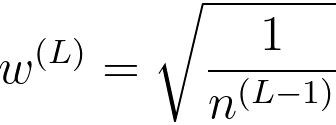

Figure 3713a shows a single neuron with its connections. The green ball represents the activation function, $a = \sigma(z)$, where $z$ is given by,

$$z = w_1x_1 + w_2x_2 + \cdots + w_nx_n \tag{3713a}$$

Figure 3724. A single neuron with its connections (Code).

Equation 3713a is a linear combination: $z$ is a weighted sum of the input variables $(x_1, x_2, \ldots, x_n)$ with corresponding weights $(w_1, w_2, \ldots, w_n)$. As the number of variables ($n$) increases, the weights ($w_i$) need to be smaller to keep the output ($z$) from becoming too large, which helps prevent vanishing and exploding gradients. An appropriate choice is to set the variance of each $w_i$ to,

$$\mathrm{Var}(w_i) = \frac{1}{n} \tag{3713b}$$

Therefore, weight initialization (choosing appropriate initial values for the weights) is crucial in mitigating vanishing and exploding gradients: if the weights are too large, the gradients can explode; if they are too small, the gradients can vanish. A very common weight initialization in a neural network is given by (code),

$$W^{[l]} = \texttt{np.random.randn(shape)} \cdot \sqrt{\frac{1}{n^{[l-1]}}} \tag{3713c}$$

where $W^{[l]}$ represents the weights in the $l$-th layer, and np.random.randn(shape) generates random values from a normal distribution with mean 0 and standard deviation 1; the shape of the array is determined by the `shape` parameter. The factor $\sqrt{1/n^{[l-1]}}$ scales the randomly initialized values. This scaling factor takes into account the number of input units in the previous layer ($n^{[l-1]}$), so that the weights have a variance of $1/n^{[l-1]}$, as in Equation 3713b.

The initialization in Equation 3713c works for sigmoid activations. He initialization is another scheme, designed for the rectified linear unit (ReLU) activation function, given by,

$$W^{[l]} = \texttt{np.random.randn(shape)} \cdot \sqrt{\frac{2}{n^{[l-1]}}} \tag{3713d}$$

The factor of 2 in the numerator, instead of 1 in Equation 3713c, is based on the use of ReLU, which outputs zero for roughly half of its inputs. Xavier/Glorot initialization, which can be used for tanh, is given by,

$$W^{[l]} = \texttt{np.random.randn(shape)} \cdot \sqrt{\frac{2}{n^{[l-1]} + n^{[l]}}} \tag{3713e}$$

Equation 3713c is an example of random initialization. Random initialization of weights in a neural network is important for several reasons:
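The initialization schemes described above can be compared with a short NumPy sketch. It assumes the scale factors $\sqrt{1/n^{[l-1]}}$ (commonly called LeCun initialization), $\sqrt{2/n^{[l-1]}}$ for He, and $\sqrt{2/(n^{[l-1]} + n^{[l]})}$ for Xavier/Glorot. The function names `initialize_weights` and `preactivation_variance` are hypothetical, and `rng.standard_normal((n_curr, n_prev))` is used as the `Generator` equivalent of `np.random.randn(n_curr, n_prev)`. The second function estimates the variance of $z$ from Equation 3713a with unit-variance inputs, to illustrate why the weights should shrink as $n$ grows.

```python
import numpy as np


def initialize_weights(n_prev, n_curr, method="lecun", rng=None):
    """Return a hypothetical (n_curr, n_prev) weight matrix W[l] for layer l.

    n_prev is n[l-1], the number of input units from the previous layer;
    n_curr is n[l], the number of units in the current layer.
    """
    rng = np.random.default_rng() if rng is None else rng
    if method == "lecun":      # assumed form of the sigmoid scheme: sqrt(1/n[l-1])
        scale = np.sqrt(1.0 / n_prev)
    elif method == "he":       # assumed He (ReLU) form: sqrt(2/n[l-1])
        scale = np.sqrt(2.0 / n_prev)
    elif method == "xavier":   # assumed Xavier/Glorot (tanh) form: sqrt(2/(n[l-1]+n[l]))
        scale = np.sqrt(2.0 / (n_prev + n_curr))
    else:
        raise ValueError(f"unknown method: {method!r}")
    # Standard normal samples (mean 0, std 1), multiplied by the scale factor.
    return rng.standard_normal((n_curr, n_prev)) * scale


def preactivation_variance(n, scaled, trials=2000, rng=None):
    """Estimate Var(z) for z = w1*x1 + ... + wn*xn with unit-variance inputs x."""
    rng = np.random.default_rng() if rng is None else rng
    x = rng.standard_normal((trials, n))   # inputs, Var(x_i) = 1
    w = rng.standard_normal((trials, n))   # unscaled weights, Var(w_i) = 1
    if scaled:
        w *= np.sqrt(1.0 / n)              # rescale so that Var(w_i) = 1/n
    z = np.sum(w * x, axis=1)              # one weighted sum z per trial
    return float(z.var())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    for n in (10, 100, 500):
        unscaled = preactivation_variance(n, scaled=False, rng=rng)
        scaled = preactivation_variance(n, scaled=True, rng=rng)
        print(f"n={n:4d}  Var(z) unscaled={unscaled:8.1f}  scaled={scaled:5.2f}")
    W = initialize_weights(400, 300, method="he", rng=rng)
    print(W.shape, round(float(W.std()), 4))
```

For independent zero-mean terms, $\mathrm{Var}(z) = n \cdot \mathrm{Var}(w_i) \cdot \mathrm{Var}(x_i)$, so the unscaled column should grow roughly in proportion to $n$ while the scaled column stays near 1; the exact printed values depend on the random seed.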

In programming, assuming a gradient_descent function has been defined, plotting the weights (w) or parameters (θ) against the cost function usually requires storing each w or θ in a list. For ML modeling, on the other hand, only the last w value needs to be returned. To do both, this code implements a gradient descent algorithm, plots the cost function over the iterations, and stores each value of w/θ in a list, while the working variable is reinitialized (overwritten) inside the loop so that only the final value is preserved for use in the ML process.
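The storing-versus-overwriting pattern described above can be sketched as follows. This is not the referenced code; it is a minimal sketch assuming a hypothetical `gradient_descent` for linear regression with a mean-squared-error cost (`compute_cost` is also hypothetical). Each iteration overwrites `w` and `b`, so only the final parameters remain after the loop, while copies of `w` and the cost are appended to lists when `keep_history=True`. Plotting is left out to keep the sketch dependency-free.

```python
import numpy as np


def compute_cost(X, y, w, b):
    """Hypothetical MSE cost J(w, b) = (1/2m) * sum((X @ w + b - y)^2)."""
    m = X.shape[0]
    residual = X @ w + b - y
    return float(residual @ residual) / (2 * m)


def gradient_descent(X, y, w_init, b_init, alpha, num_iters, keep_history=True):
    """Hypothetical batch gradient descent returning final w, b and their history."""
    m = X.shape[0]
    w = np.array(w_init, dtype=float)  # copy, so the caller's array is not modified
    b = float(b_init)
    w_history, cost_history = [], []
    for _ in range(num_iters):
        residual = X @ w + b - y
        dj_dw = X.T @ residual / m     # gradient of J with respect to w
        dj_db = residual.mean()        # gradient of J with respect to b
        # Reassigning w and b overwrites the previous values, so after the
        # loop they hold only the final parameters used for modeling.
        w = w - alpha * dj_dw
        b = b - alpha * dj_db
        if keep_history:
            # Store a copy of every w and its cost so they can be plotted later.
            w_history.append(w.copy())
            cost_history.append(compute_cost(X, y, w, b))
    return w, b, w_history, cost_history


if __name__ == "__main__":
    X = np.array([[1.0], [2.0], [3.0], [4.0]])
    y = 2.0 * X[:, 0] + 1.0            # toy data generated from w = 2, b = 1
    w, b, w_hist, J_hist = gradient_descent(X, y, np.zeros(1), 0.0,
                                            alpha=0.05, num_iters=2000)
    print(w, b, len(w_hist), J_hist[0], J_hist[-1])
```

The lists `w_hist` and `J_hist` are what would be passed to a plotting routine (for example, matplotlib's `plt.plot(J_hist)`), while only `w` and `b` are carried forward into the model.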
