
Precision and Recall Tradeoff - Python Automation and Machine Learning for ICs - An Online Book


Python Automation and Machine Learning for ICs http://www.globalsino.com/ICs/


Precision and recall are often in a tradeoff relationship: improving one may come at the expense of the other. This tradeoff is particularly evident when adjusting the threshold a model uses to classify instances as positive or negative.

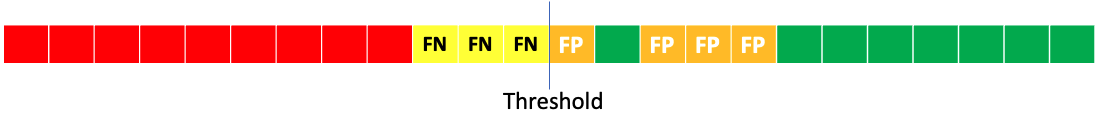
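For reference, the standard definitions of precision and recall in terms of true positives (TP), false positives (FP), and false negatives (FN) are given below. The observation that drives the tradeoff is that TP + FN equals the number of actual positives, which does not depend on the threshold.

```latex
\text{Precision} = \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}, \qquad
TP + FN = P \;\; (\text{number of actual positives, fixed for a given dataset})
```

Raising the threshold can only move positive examples from TP to FN and negative examples from FP to TN, so recall can stay the same or decrease but never increase. Precision often rises as the threshold goes up, but it is not guaranteed to do so at every step.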

Finding the right balance depends on the goals and requirements of the application. In some scenarios precision is more critical, while in others recall takes precedence. The tradeoff should be weighed against the context and the consequences of false positives (FP) and false negatives (FN) in the particular task. As an example, Figure 3600a shows the distribution of true negatives (TN), false negatives (FN), false positives (FP), and true positives (TP) for an example classifier when the threshold is set at the middle.

Therefore, we obtain:

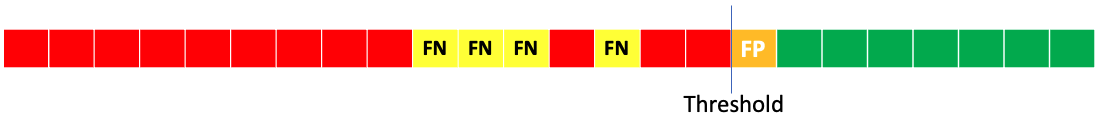
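To make the counting concrete, here is a minimal sketch using a small, made-up set of labels and scores (hypothetical data, not the data behind Figure 3600a). The helper names `confusion_counts` and `precision_recall` are illustrative, not from any library. It assumes a score at or above the threshold is predicted positive, and compares a middle threshold of 0.5 with a higher threshold of 0.75.

```python
# Hypothetical toy data (not from Figure 3600a): true labels (1 = positive)
# and classifier scores, listed in ascending score order.
y_true = [0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 1, 1]
scores = [0.05, 0.15, 0.25, 0.35, 0.40, 0.55, 0.60, 0.65, 0.70, 0.80, 0.90, 0.95]


def confusion_counts(y_true, scores, threshold):
    """Return (TP, FP, FN, TN), predicting positive when score >= threshold."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, scores):
        predicted_positive = score >= threshold
        if predicted_positive and label == 1:
            tp += 1
        elif predicted_positive:
            fp += 1
        elif label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), Recall = TP / (TP + FN); 0.0 if undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Compare a middle threshold with a higher one.
for threshold in (0.5, 0.75):
    tp, fp, fn, tn = confusion_counts(y_true, scores, threshold)
    precision, recall = precision_recall(tp, fp, fn)
    print(f"threshold={threshold}: TP={tp} FP={fp} FN={fn} TN={tn}, "
          f"precision={precision:.2f}, recall={recall:.2f}")
```

With this made-up data, raising the threshold from 0.5 to 0.75 changes FN from 1 to 3 and FP from 2 to 0, so precision rises from about 0.71 to 1.00 while recall falls from about 0.83 to 0.50.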

If we increase the threshold, as shown in Figure 3600b, we get more FN but fewer FP.

Therefore, we obtain:

As we can see, precision increases when the threshold increases, while recall decreases. The instances classified as positive are now more often correct, but more of the actual positive examples are missed (they have become FN). The F1 score, the harmonic mean of precision and recall, can be used to find a suitable balance between the two in a model.
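One common way to use the F1 score for this is to sweep candidate thresholds on labeled validation data and keep the one with the highest F1. The sketch below does this on hypothetical data with plain Python; the function name `metrics_at` and the choice of every distinct score as a candidate threshold are illustrative assumptions, not the book's method.

```python
# Hypothetical validation data: true labels (1 = positive) and model scores.
y_true = [0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 1, 1]
scores = [0.05, 0.15, 0.25, 0.35, 0.40, 0.55, 0.60, 0.65, 0.70, 0.80, 0.90, 0.95]


def metrics_at(y_true, scores, threshold):
    """Return (precision, recall, F1), predicting positive when score >= threshold."""
    predicted = [s >= threshold for s in scores]
    tp = sum(p and y == 1 for p, y in zip(predicted, y_true))
    fp = sum(p and y == 0 for p, y in zip(predicted, y_true))
    fn = sum(not p and y == 1 for p, y in zip(predicted, y_true))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Try every distinct score as a candidate threshold and keep the highest F1.
candidates = sorted(set(scores))
best_threshold = max(candidates, key=lambda t: metrics_at(y_true, scores, t)[2])

for t in candidates:
    p, r, f1 = metrics_at(y_true, scores, t)
    print(f"threshold={t:.2f}  precision={p:.2f}  recall={r:.2f}  F1={f1:.2f}")
print("best threshold by F1:", best_threshold)
```

On this made-up data the F1-maximizing threshold is 0.40 (precision 0.75, recall 1.00, F1 about 0.86). Because F1 weights precision and recall equally, an application where false positives and false negatives carry different costs may prefer a different selection rule.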
