
Feedforward Neural Network - Python Automation and Machine Learning for ICs - An Online Book


Python Automation and Machine Learning for ICs http://www.globalsino.com/ICs/


=================================================================================

A feedforward neural network is a type of artificial neural network in which information flows in one direction only: from the input layer, through the hidden layers (if any), to the output layer. The connections between nodes form no cycles or loops, so data moves forward without any feedback connections. The components of a feedforward neural network are:

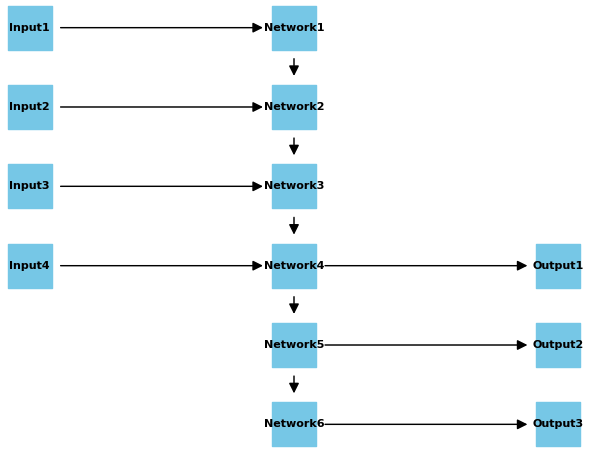
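The one-directional flow described above can be sketched in NumPy as a loop that hands each layer's activations to the next layer and never sends anything backward. This is a minimal sketch, not the book's code: the layer sizes, the ReLU hidden activation, the He-style random initialization, and the helper names `init_params` and `forward` are all assumptions chosen for illustration.

```python
import numpy as np

def relu(z):
    # ReLU activation used (by assumption) in every hidden layer
    return np.maximum(0.0, z)

def init_params(layer_sizes, seed=0):
    # One (weights, biases) pair per connection between consecutive layers
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        b = np.zeros(n_out)
        params.append((W, b))
    return params

def forward(x, params):
    # Information moves strictly input -> hidden layers -> output; no feedback
    a = x
    for i, (W, b) in enumerate(params):
        z = a @ W + b
        # Hidden layers apply ReLU; the output layer is left linear
        a = relu(z) if i < len(params) - 1 else z
    return a

# 4 parallel inputs, two hidden layers of 8 nodes, 3 parallel outputs
layer_sizes = [4, 8, 8, 3]
params = init_params(layer_sizes)
X = np.ones((5, 4))          # a batch of 5 input vectors
Y = forward(X, params)
print(Y.shape)               # (5, 3)
```

Each row of `X` is propagated independently, so the batch dimension simply passes through every layer.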

Figure 3542 shows a neural network with parallel inputs, several hidden layers, and parallel outputs. It is a basic representation of a feedforward neural network with multiple hidden layers.

Figure 3542. Feedforward neural network with multiple hidden layers (code).

Training a feedforward neural network involves adjusting the weights and biases based on the difference between the predicted output and the actual target. This is typically done with an optimization algorithm such as gradient descent, where the gradient of the loss with respect to each weight and bias is computed by backpropagation. Feedforward neural networks are the foundation of deep learning models, in which multiple hidden layers allow the network to learn hierarchical features and representations. They are widely used in applications such as image recognition, speech recognition, and natural language processing.
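The training procedure described above can be sketched with plain NumPy as three repeated steps: a forward pass, backpropagation of the prediction error to obtain gradients, and a gradient-descent update of the weights and biases. This is a minimal sketch under stated assumptions, not the code behind Figure 3542: the XOR toy data, the single tanh hidden layer of 8 units, the half mean-squared-error loss, the learning rate of 0.1, and the 5000 full-batch epochs are all illustrative choices.

```python
import numpy as np

# Toy dataset (assumption): XOR, which cannot be fit without a hidden layer
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

rng = np.random.default_rng(0)
n_hidden = 8
W1 = rng.normal(0.0, 0.5, size=(2, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(0.0, 0.5, size=(n_hidden, 1))
b2 = np.zeros(1)

def loss_fn(y_hat, y):
    # Half mean squared error between predicted output and target
    return 0.5 * np.mean((y_hat - y) ** 2)

lr = 0.1
losses = []
for epoch in range(5000):
    # Forward pass: input -> hidden (tanh) -> linear output
    h = np.tanh(X @ W1 + b1)
    y_hat = h @ W2 + b2
    losses.append(loss_fn(y_hat, y))

    # Backward pass (backpropagation): gradient of the loss for each parameter
    n = X.shape[0]
    d_yhat = (y_hat - y) / n
    dW2 = h.T @ d_yhat
    db2 = d_yhat.sum(axis=0)
    d_z1 = (d_yhat @ W2.T) * (1.0 - h ** 2)  # tanh'(z) = 1 - tanh(z)^2
    dW1 = X.T @ d_z1
    db1 = d_z1.sum(axis=0)

    # Gradient-descent update of weights and biases
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

y_pred = np.tanh(X @ W1 + b1) @ W2 + b2
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
print(np.round(y_pred, 2).ravel())
```

Full-batch gradient descent keeps the sketch short; practical training loops more often use mini-batches and an adaptive optimizer, but the forward/backward/update structure stays the same.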

============================================
