|

||||||||

n-grams - Python Automation and Machine Learning for ICs - - An Online Book - |

||||||||

| Python Automation and Machine Learning for ICs http://www.globalsino.com/ICs/ | ||||||||

| Chapter/Index: Introduction | A | B | C | D | E | F | G | H | I | J | K | L | M | N | O | P | Q | R | S | T | U | V | W | X | Y | Z | Appendix | ||||||||

================================================================================= An n-gram is a contiguous sequence of n items (usually words) from a given sample of text. N-grams can be a useful and more data-driven approach for certain aspects of natural language processing (NLP), especially when considering the statistical relationships between words in a sequence. N-grams represent contiguous sequences of n items (typically words) from a sample of text. Some reasons why n-grams can be a helpful solution are:

However, note that while n-grams are effective for certain aspects of language modeling, they have limitations:

A couple of examples of different n-grams are:

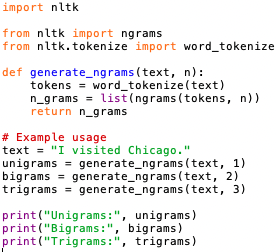

For instance, in a bigram model, we might calculate the probability of a word given its preceding word. In the example sentence "I visited Chicago," the bigram probabilities could help predict the likelihood of "visited" given "I" or "Chicago" given "visited." A simple Python code to generate n-grams (unigram, bigram and trigram) from a given text using NLTK is (code): The output of the code above is: In this example above, ngrams from NLTK is used to generate unigrams, bigrams, and trigrams from the input text. The word_tokenize function is used to tokenize the input text into words. ============================================

|

||||||||

| ================================================================================= | ||||||||

|

|

||||||||