
Machine Learning Algorithms - An Online Book: Python Automation and Machine Learning for ICs by Yougui Liao


Python Automation and Machine Learning for ICs: http://www.globalsino.com/ICs/


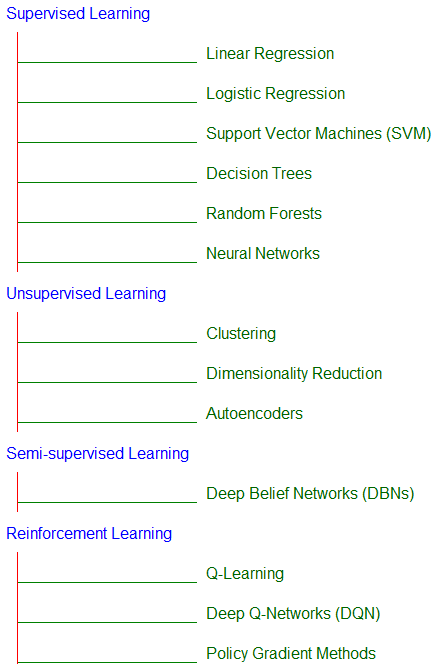

Figure 3369a presents some standard machine learning algorithms.

Figure 3369a. Some standard machine learning algorithms (Code).

Table 3369a lists some standard machine learning algorithms to choose from.

Table 3369a. Some "standard" machine learning algorithms to choose from.

Machine learning models, like many technologies, will likely never be perfect. They are trained to approximate, and generalize from, the data they are given, and that data carries its own limitations and imperfections. Models can be very effective across a wide range of tasks, but they can still make errors, miss subtle distinctions, or fail in unpredictable ways, especially when they encounter inputs that deviate from their training data. Their performance can keep improving, but absolute perfection is unlikely because of these inherent constraints.
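The point about inputs that deviate from the training data can be illustrated with a small sketch. It is not taken from the book: the target function `x**2`, the training range 0 to 1, the shifted range 2 to 3, and the helper names `fit_line` and `mean_abs_error` are all assumptions chosen for illustration. A straight line is fitted by ordinary least squares on a narrow range, then its error is compared inside and outside that range.

```python
# Hypothetical illustration (not from the book): a simple model that looks
# adequate on its training range can fail badly on inputs outside it.

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def mean_abs_error(a, b, xs, ys):
    """Mean absolute error of the line a*x + b against the true values ys."""
    return sum(abs((a * x + b) - y) for x, y in zip(xs, ys)) / len(xs)


def target(x):
    """Assumed 'true' relationship the model tries to approximate."""
    return x ** 2


# Training data covers only the range 0.0 .. 1.0.
train_x = [i / 10 for i in range(11)]
train_y = [target(x) for x in train_x]
a, b = fit_line(train_x, train_y)

# New points inside the training range, and points well outside it.
in_range_x = [0.05 + i / 10 for i in range(10)]
shifted_x = [2.0 + i / 10 for i in range(11)]

in_range_error = mean_abs_error(a, b, in_range_x, [target(x) for x in in_range_x])
shifted_error = mean_abs_error(a, b, shifted_x, [target(x) for x in shifted_x])

print(f"error inside training range:  {in_range_error:.3f}")
print(f"error outside training range: {shifted_error:.3f}")
```

The line is only an approximation of the curve, so it has some error even inside the training range, and the error grows sharply once the inputs move away from what the model was fitted on.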
