=================================================================================

Multicollinearity occurs when two or more predictor variables in a multiple regression model are highly correlated, meaning that one can be linearly predicted from the others with a substantial degree of accuracy. In practice, multicollinearity can cause several problems in a regression analysis:

- Unstable Coefficients: Multicollinearity makes the coefficient estimates highly sensitive to small changes in the data or in the model specification. Adding or removing a few observations, or adding or dropping a correlated predictor, can lead to large changes in the estimated coefficients, sometimes even flipping their signs.

- Inflated Standard Errors: It increases the standard errors of the coefficient estimates. As a result, the coefficients of some independent variables may appear statistically insignificant even when those variables genuinely affect the dependent variable.

- Reduced Precision: Multicollinearity reduces the precision of the estimated coefficients (their confidence intervals become wider), which lowers the statistical power of the tests on individual coefficients.

- Difficulty in Interpretation: When predictors are correlated, it can be very difficult to separate the effect of each predictor on the dependent variable, because the predictors tend to change together.
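
A small simulation can make the first two points concrete. The sketch below assumes NumPy; the data-generating model y = x1 + x2 + noise and the helper `coefficient_spread` are hypothetical, chosen only for illustration. It refits ordinary least squares on many simulated samples and reports how much the slope estimates vary from sample to sample. With a predictor correlation of 0.99, theory predicts each slope's variance to be about 1 / (1 - 0.99²) ≈ 50 times larger than with uncorrelated predictors, so the spread should come out roughly 7 times wider.

```python
import numpy as np


def coefficient_spread(rho: float, n: int = 100, trials: int = 500, seed: int = 0) -> np.ndarray:
    """Refit OLS on many simulated samples and return the standard deviation
    of the two slope estimates across samples.

    Hypothetical setup: x1 and x2 have correlation rho, and
    y = 1.0 * x1 + 1.0 * x2 + standard normal noise.
    """
    rng = np.random.default_rng(seed)
    cov = np.array([[1.0, rho], [rho, 1.0]])
    estimates = []
    for _ in range(trials):
        X = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=n)
        y = X[:, 0] + X[:, 1] + rng.normal(scale=1.0, size=n)
        # Add an intercept column and solve the least-squares problem
        design = np.column_stack([np.ones(n), X])
        beta, *_ = np.linalg.lstsq(design, y, rcond=None)
        estimates.append(beta[1:])  # keep the two slopes, drop the intercept
    return np.std(np.array(estimates), axis=0)


low = coefficient_spread(rho=0.0)
high = coefficient_spread(rho=0.99)
print("spread of slopes, rho = 0.00:", low.round(3))
print("spread of slopes, rho = 0.99:", high.round(3))
```

The true slopes are identical in both runs; only the correlation between the predictors changes, so any difference in spread is due to multicollinearity alone.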

Several methods can be used to detect multicollinearity:

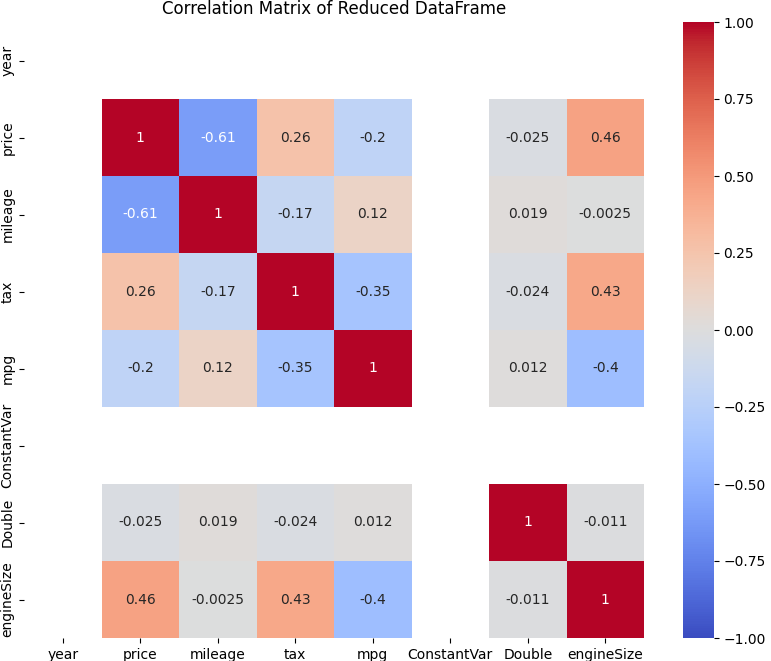

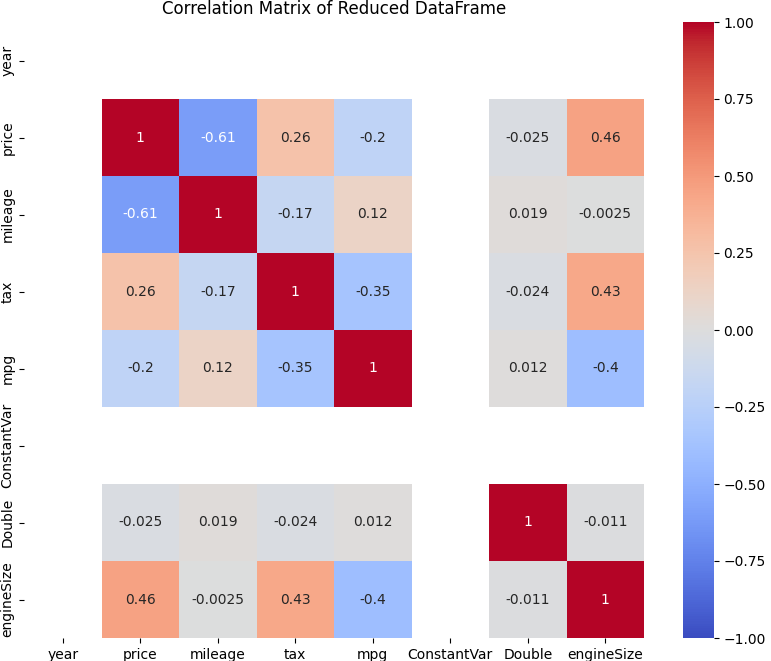

- Correlation Matrix: Review the correlation matrix of the independent variables, usually computed with Pearson's correlation coefficient. If any correlation coefficient between two variables is high in absolute value (e.g., above 0.8 or below -0.8), multicollinearity might be an issue. Note that a correlation matrix only reveals pairwise relationships: a predictor that is a linear combination of several others can go undetected, which is one reason to also check the VIF.

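- Variance Inflation Factor (VIF): Calculate the VIF for each predictor j as VIF_j = 1 / (1 - R_j²), where R_j² is the coefficient of determination obtained by regressing predictor j on all the other predictors. A VIF greater than 10 (some sources use 5) suggests significant multicollinearity.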

- Tolerance: Tolerance is the reciprocal of VIF, i.e., 1 - R_j². A tolerance below 0.1 (some sources use 0.2) suggests a serious multicollinearity problem.
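
The three checks above can be sketched in a few lines of Python, assuming pandas and NumPy. The helper `vif_table` and the example data are hypothetical; statsmodels also provides a `variance_inflation_factor` function in `statsmodels.stats.outliers_influence`, but the version here is written out with NumPy so the formula VIF_j = 1 / (1 - R_j²) is visible. The data are built so that x4 is almost the sum of three independent predictors.

```python
import numpy as np
import pandas as pd


def vif_table(df: pd.DataFrame) -> pd.DataFrame:
    """Return the VIF and tolerance of every column in a numeric DataFrame.

    VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing column j on
    all other columns plus an intercept. Tolerance_j = 1 / VIF_j.
    """
    X = df.to_numpy(dtype=float)
    n, p = X.shape
    rows = []
    for j in range(p):
        target = X[:, j]
        # Design matrix: intercept + every other predictor
        design = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        ss_res = np.sum((target - design @ beta) ** 2)
        ss_tot = np.sum((target - target.mean()) ** 2)
        r_squared = 1.0 - ss_res / ss_tot
        vif = np.inf if r_squared >= 1.0 else 1.0 / (1.0 - r_squared)
        rows.append({"variable": df.columns[j], "VIF": vif, "tolerance": 1.0 / vif})
    return pd.DataFrame(rows).set_index("variable")


# Hypothetical data: x4 is (almost) the sum of x1, x2 and x3
rng = np.random.default_rng(42)
n = 200
data = pd.DataFrame({f"x{i}": rng.normal(size=n) for i in (1, 2, 3)})
data["x4"] = data["x1"] + data["x2"] + data["x3"] + rng.normal(scale=0.1, size=n)

# 1) Pairwise Pearson correlations; flag pairs with |r| > 0.8
corr = data.corr(method="pearson")
high_pairs = [
    (a, b)
    for i, a in enumerate(corr.columns)
    for b in corr.columns[i + 1:]
    if abs(corr.loc[a, b]) > 0.8
]
print(corr.round(2))
print("pairs with |r| > 0.8:", high_pairs)

# 2) VIF and tolerance for each predictor
print(vif_table(data).round(3))
```

In this constructed example, no pair should cross the 0.8 cut-off (in theory each of x1, x2, x3 correlates with x4 at about 0.58), yet every VIF should land far above 10. That is exactly the situation a pairwise correlation check misses.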

Different methods can be used to address multicollinearity issues:

- Remove Highly Correlated Predictors: If two variables are highly correlated, consider removing one of them from the model. For example, the script below calculates the correlation matrix, identifies and removes columns whose correlations exceed a specified threshold (here, 0.9), and then visualizes the correlation matrix of the reduced DataFrame:

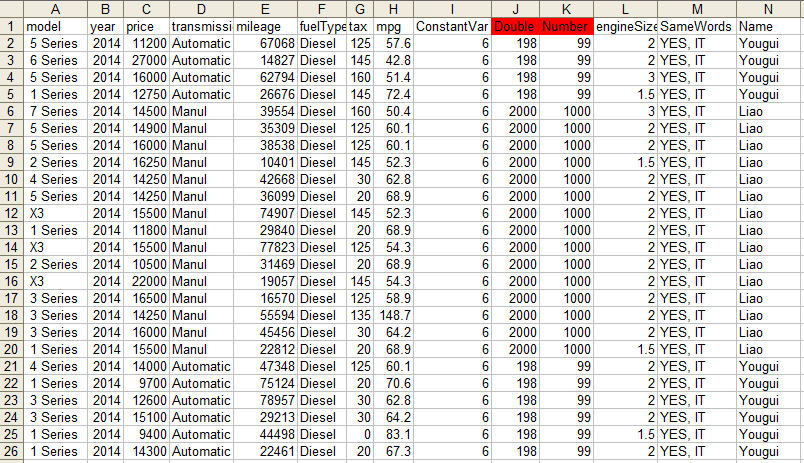

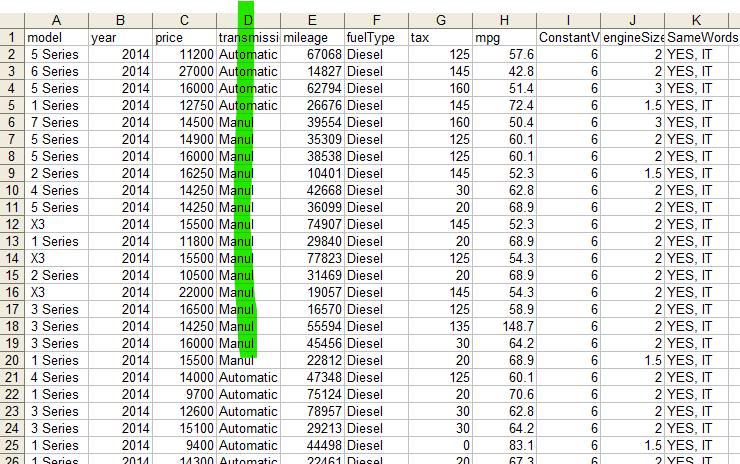

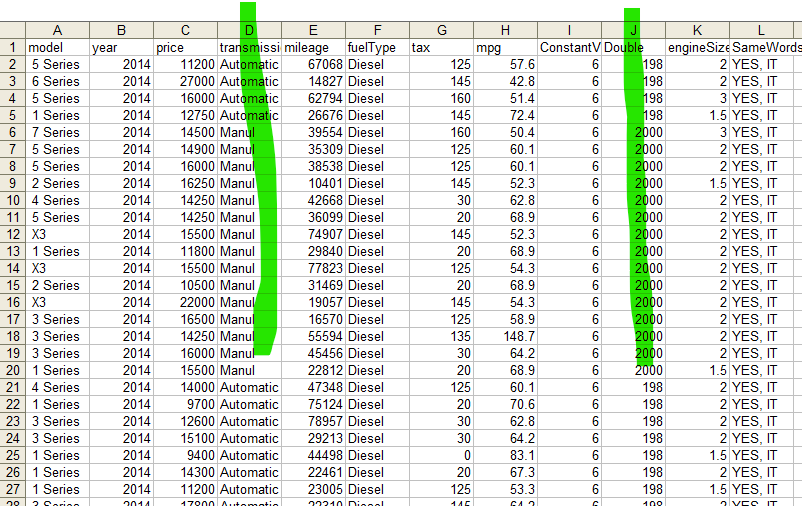

Input:

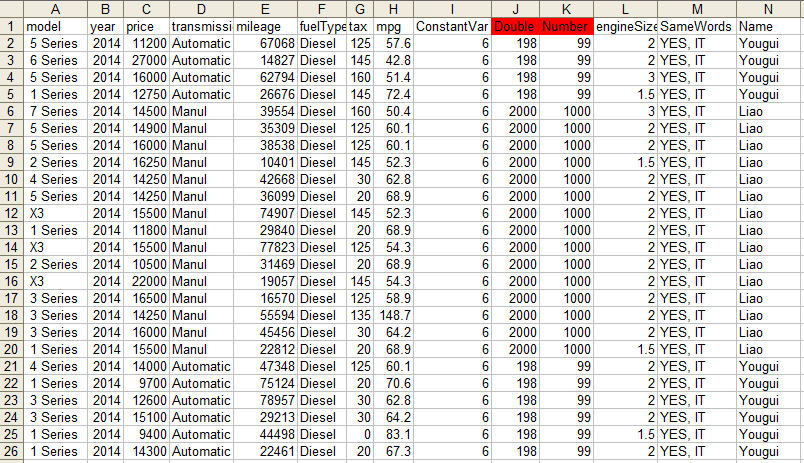

Output 1 from script 1:

In this output, multicollinearity among the numeric columns is handled, but multicollinearity between string and numeric columns, and among string columns, is not.

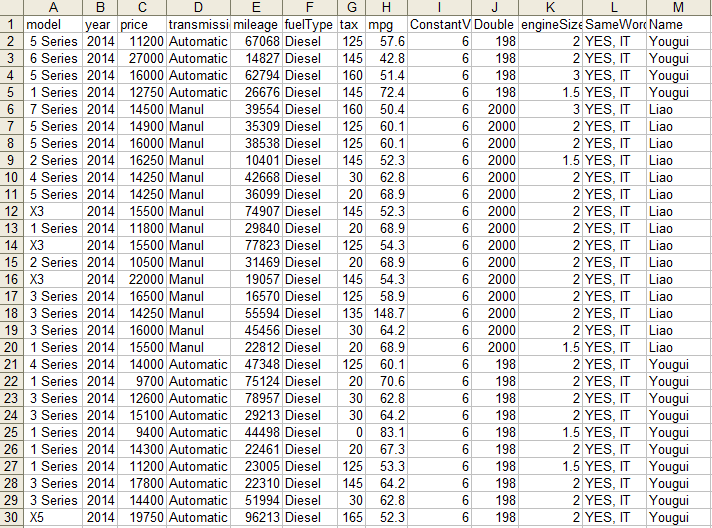

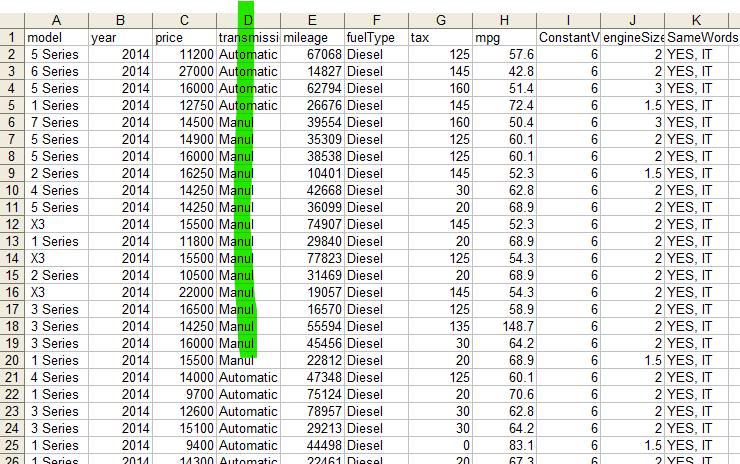

Output 2 from script 2: when "threshold = 1" is used:

In this output, multicollinearity among numeric columns and among string columns is handled, but multicollinearity between string and numeric columns is not.

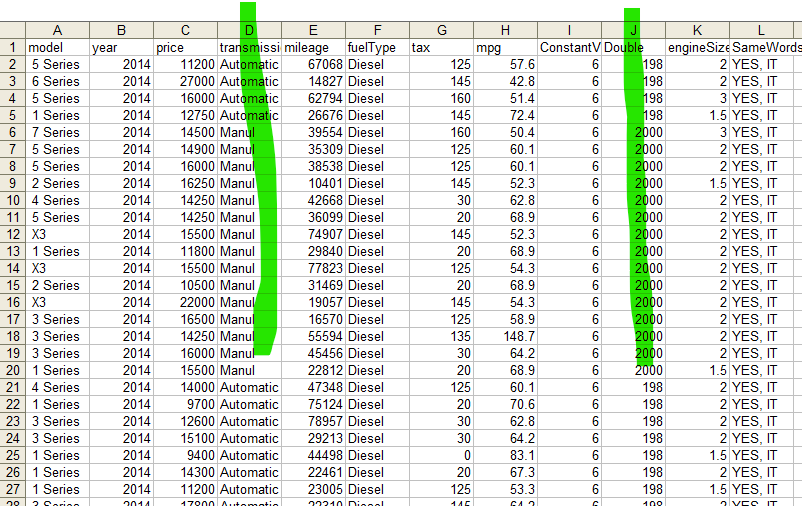

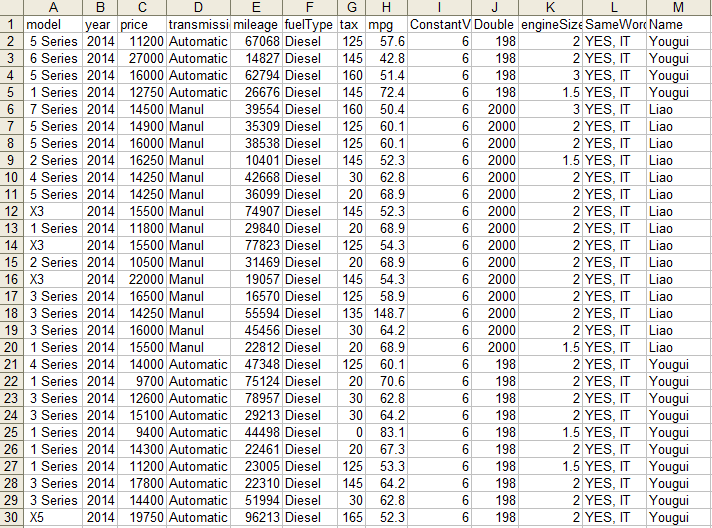

Output 2 from script 2: when "threshold = 0.9" is used:

In this output, multicollinearity is handled in all three cases: among numeric columns, among string columns, and between string and numeric columns.
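
The scripts above appear only as images, and they also deal with string columns. As a text reference, here is a minimal sketch of the common upper-triangle technique for numeric columns only, assuming pandas and NumPy. The function name `drop_highly_correlated` and the example data are hypothetical, and this is not the author's script. String columns would first need an encoding (for example one-hot) or a categorical association measure before they could be compared the same way.

```python
import numpy as np
import pandas as pd


def drop_highly_correlated(df: pd.DataFrame, threshold: float = 0.9) -> pd.DataFrame:
    """Drop numeric columns whose absolute Pearson correlation with an
    earlier numeric column exceeds `threshold`; other columns are kept."""
    corr = df.select_dtypes(include="number").corr().abs()
    # Keep only the upper triangle (k=1 excludes the diagonal) so each pair is checked once
    mask = np.triu(np.ones(corr.shape, dtype=bool), k=1)
    upper = corr.where(mask)
    to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]
    return df.drop(columns=to_drop)


# Hypothetical data: height_in is height_cm converted to inches (rounded)
df = pd.DataFrame({
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "weight_kg": [55.0, 70.0, 60.0, 85.0, 72.0],
    "city": ["A", "B", "A", "C", "B"],
})

reduced = drop_highly_correlated(df, threshold=0.9)
print(reduced.columns.tolist())  # ['height_cm', 'weight_kg', 'city']
print(reduced.select_dtypes(include="number").corr().round(2))
```

With this approach, the later column of a correlated pair is the one removed, so column order decides which variable survives; domain knowledge may favor keeping the other one.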

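- Principal Component Analysis (PCA): PCA replaces the correlated predictors with a smaller set of uncorrelated principal components (linear combinations of the original variables), reducing the dimensionality of the data set with minimal loss of information. The trade-off is that the components are harder to interpret than the original variables.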

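- Regularization: Techniques like Ridge or Lasso regression can reduce the impact of multicollinearity by penalizing large coefficients, which stabilizes the estimates. Ridge tends to spread the effect across correlated predictors, while Lasso tends to keep one of them and shrink the others to zero. However, neither solves the problem of understanding each individual predictor's impact on the dependent variable.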
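
To illustrate the last two remedies, here is a sketch using NumPy only; the data and the `ridge` helper are hypothetical. It fits ordinary least squares and closed-form ridge regression on two nearly identical predictors, then runs PCA through a singular value decomposition. In practice, scikit-learn's `Ridge`, `Lasso` and `PCA` classes would typically be used instead.

```python
import numpy as np


def ridge(X: np.ndarray, y: np.ndarray, alpha: float) -> np.ndarray:
    """Closed-form ridge estimate (X'X + alpha*I)^-1 X'y; alpha=0 gives OLS.
    Assumes X is standardized and y is centered, so no intercept is fitted."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(p), X.T @ y)


# Hypothetical data: x2 is a near-copy of x1, and only x1 drives y
rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.05, size=n)
X = np.column_stack([x1, x2])
y = 2.0 * x1 + rng.normal(size=n)

Xs = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize predictors
yc = y - y.mean()                           # center the response

beta_ols = ridge(Xs, yc, alpha=0.0)
beta_ridge = ridge(Xs, yc, alpha=10.0)
print("OLS coefficients:  ", beta_ols.round(3))
print("Ridge coefficients:", beta_ridge.round(3))

# PCA via SVD: rows of Vt are the principal directions
_, s, Vt = np.linalg.svd(Xs, full_matrices=False)
scores = Xs @ Vt.T                           # principal component scores
explained = s**2 / np.sum(s**2)              # share of variance per component
print("explained variance ratio:", explained.round(4))
print("corr between component scores:", np.corrcoef(scores.T)[0, 1].round(6))
```

Ridge always shrinks the coefficient vector relative to OLS for a positive penalty, and here it tends to split the effect between x1 and x2 instead of producing the large offsetting estimates OLS can give. The first principal component captures nearly all the variance, and the component scores are uncorrelated by construction.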

Note that multicollinearity primarily refers to issues within multiple linear regression models where predictor variables are linearly correlated. However, the concept can extend to other types of regression models as well, particularly those that still rely on linear combinations of variables, such as:

- Polynomial Regression: Even though polynomial regression models a non-linear relationship between the independent variable and the dependent variable, the model remains linear in its parameters. Polynomial terms such as x and x² are often strongly correlated with each other (especially when x takes only positive values), which can make the coefficients of individual terms hard to interpret; centering x before creating the terms reduces this correlation.

- Logistic Regression: This model is used for binary outcome prediction and models the log-odds of the outcome as a linear combination of the predictors. Multicollinearity can therefore distort the estimated coefficients and inflate their standard errors in logistic regression, much as it does in multiple linear regression.

- Cox Proportional Hazards Model: Used in survival analysis, this model assumes a linear relationship between the log hazard and the predictors. Here too, multicollinearity among predictors can lead to issues similar to those in linear regression.
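
The polynomial case is easy to check numerically. The sketch below uses NumPy with a hypothetical, evenly spaced grid of x values from 1 to 10, and compares the correlation between x and x² before and after centering x.

```python
import numpy as np

x = np.linspace(1.0, 10.0, 50)      # strictly positive predictor values
raw_corr = np.corrcoef(x, x**2)[0, 1]

x_c = x - x.mean()                  # center x before building the squared term
centered_corr = np.corrcoef(x_c, x_c**2)[0, 1]

print(f"corr(x, x^2)     = {raw_corr:.3f}")
print(f"corr(x_c, x_c^2) = {centered_corr:.3f}")
```

Because this grid is symmetric around its mean, the centered correlation is essentially zero; for real, asymmetric data, centering usually reduces the correlation rather than eliminating it.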

===========================================
